Nvidia News

-

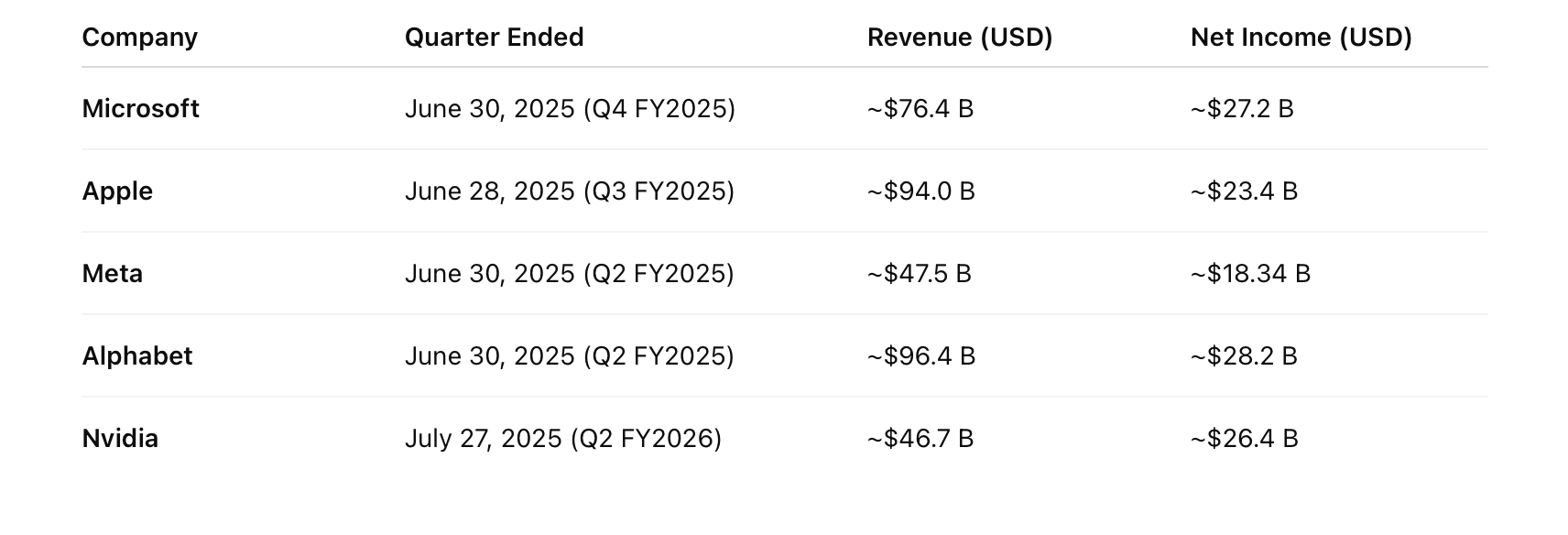

Some highlights taken from the conference call. If you look at our previous posts, we predicted a $60B print for Q3, and that is exactly what has been set up. The +$8B QoQ cadence due to Blackwell Ultra has materialised. We called it when no one else did. The result is very impressive. The after-hours fade of $5 is nothing but option gamblers selling their hedges, nothing more. The company has delivered (and then some) all the ingredients to see its stock ascension continue, and imo it will. Their margins are just incredible (well over 70% and guided to rise to the mid-70s). For every $10 in revenue they banked $5.65 after tax.

Here is a company trading at a fwd PE of 25 or less, with continued geometric average growth in the 50% range over the next 5 years. For comparison, Palantir has a PE nearer 300-400, yet 'they say Nvidia is expensive'. It is not.

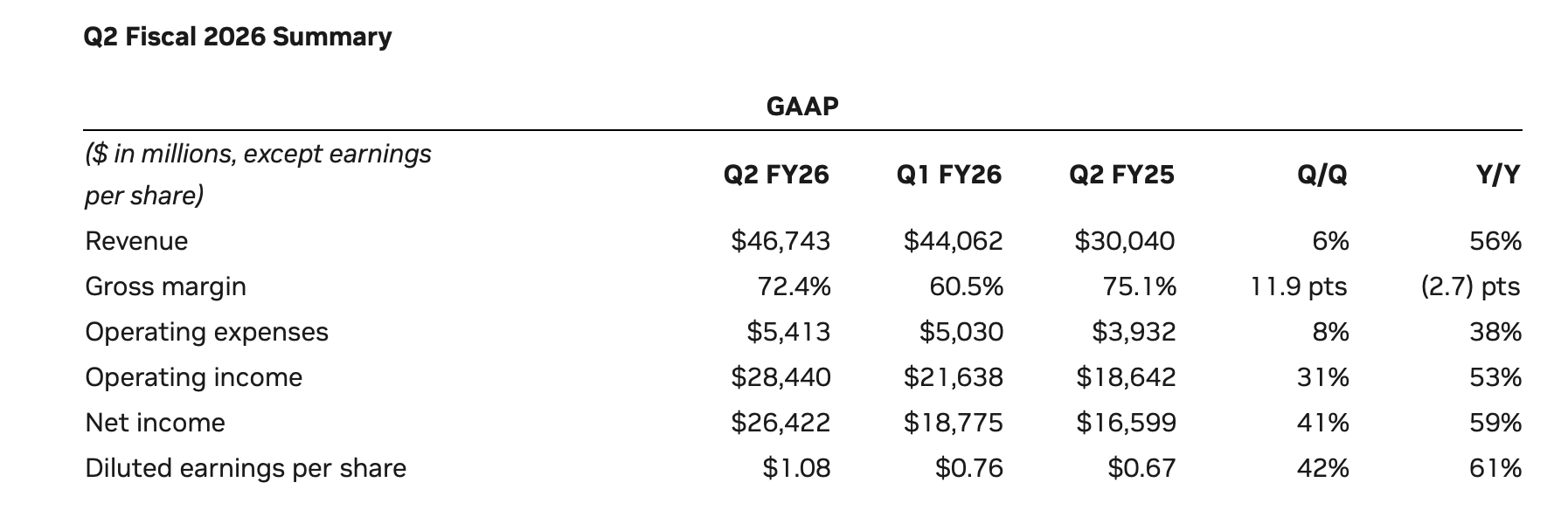

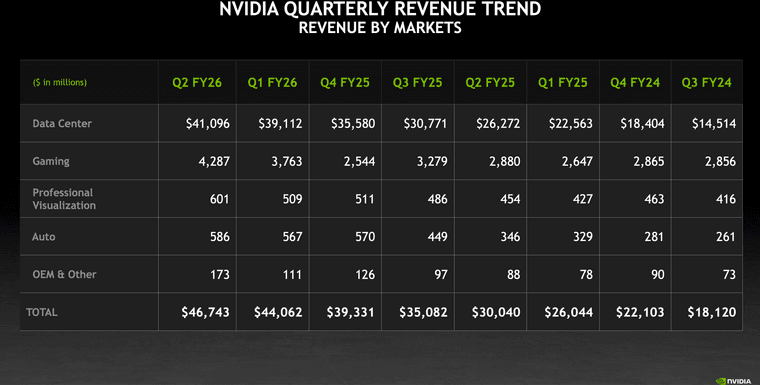

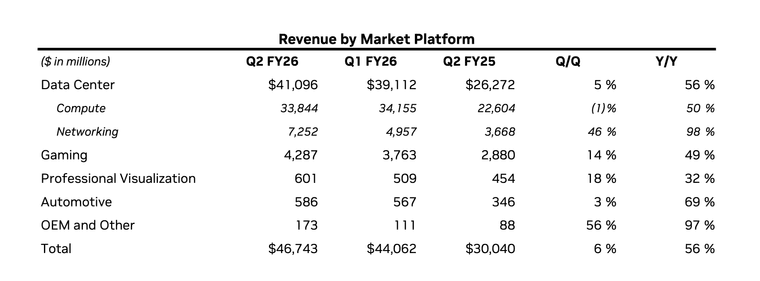

Financial Performance: NVIDIA reported record Q2 revenue of $46.7 billion, surpassing expectations, with sequential growth across all platforms. Data centre revenue rose 56% year-over-year, despite a $4 billion drop in H20 (China-specific) revenue. The Blackwell platform grew 17% sequentially, with GB300 production starting.

Non-GAAP gross margin was 72.7%, including a $180 million H20 inventory benefit (a non-China customer purchased some H20). Excluding this, margins were 72.3%. Q3 revenue is projected at $54 billion (±2%), with non-GAAP gross margins at 73.5%, and full-year margins expected in the mid-70s.
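As a quick sanity check on the two margin figures, here is a minimal sketch that backs the $180 million benefit out of gross profit. The function name is made up for illustration, and it assumes the benefit flows straight through to gross profit with no offsetting cost, which is a simplification.

```python
def margin_excluding_benefit(revenue_bn, reported_margin_pct, benefit_bn):
    """Gross margin (%) after removing a one-off benefit from gross profit."""
    gross_profit_bn = revenue_bn * reported_margin_pct / 100
    return (gross_profit_bn - benefit_bn) / revenue_bn * 100

# Figures quoted above: $46.7bn revenue, 72.7% margin, $0.18bn H20 benefit
adjusted = margin_excluding_benefit(46.7, 72.7, 0.18)
print(f"{adjusted:.1f}%")
```

Under that assumption the adjusted figure rounds to the 72.3% quoted on the call.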

NVIDIA returned $10 billion to shareholders via repurchases and dividends, with a new $60 billion share repurchase authorisation.

Key Business Highlights:

Data Centre and AI Infrastructure: NVIDIA anticipates a $3–4 trillion AI infrastructure market by 2030 (supercharged growth), driven by reasoning agentic AI, which demands 100x–1,000x more compute than traditional models. Blackwell’s NVLink 72 rack-scale systems deliver significant performance and energy efficiency gains, enabling a 50x increase in token-per-watt efficiency compared to Hopper. Major adopters include OpenAI, Meta, and Mistral.

China Market: Excluded from the Q3 outlook due to U.S. licensing reviews. Colette Kress noted potential H20 revenue of $2–5 billion in Q3 if approvals are granted, with supply ready and more possible if demand rises. Jensen Huang estimated China’s AI market at $50 billion this year, with a 50% CAGR, driven by 50% of global AI researchers residing there. Approval for Blackwell in China is being advocated to support U.S. tech leadership.

Networking: Record $7.3 billion revenue, up 46% sequentially, with Spectrum-X Ethernet exceeding $10 billion annually. Spectrum-XGS, for giga-scale data centre interconnects, doubles GPU communication speed, enhancing AI factory efficiency.

Other Segments: Gaming revenue hit $4.3 billion (up 49% year-over-year), professional visualisation $601 million (up 32%), and automotive $586 million (up 69%), driven by the Thor SoC (system on chip) for autonomous vehicles. Sovereign AI revenue is expected to exceed $20 billion in 2025, doubling from last year.

Rubin Platform: Set for 2026 volume production, Rubin will continue NVIDIA’s annual cadence, introducing innovations to boost performance-per-watt, critical for power-limited data centres. Rubin is on time (contrary to rumours).

Q3 Outlook: Revenue expected at $54 billion, with Blackwell driving most of the $7 billion sequential growth. Hopper (H100, H200) remains in demand but secondary to Blackwell. Operating expenses will rise in the high-30s percent range year-over-year to support growth.

Significance of Results:

NVIDIA’s record-breaking Q2 underscores its dominance in the AI infrastructure market, with Blackwell setting a new standard for AI inference and training. The exclusion of China from the Q3 outlook highlights geopolitical challenges, yet the potential $2–5 billion H20 revenue signals significant upside if approvals are secured, as Kress indicated is likely. This, combined with a projected 50% CAGR for the AI market, positions NVIDIA to capture a substantial share of the $3–4 trillion opportunity by 2030. The company’s full-stack approach—spanning compute, networking, and software—mitigates competitive threats from ASICs, ensuring long-term relevance across clouds, enterprises, and robotics. The focus on energy efficiency and performance-per-watt addresses critical data centre constraints, driving customer revenue and margins, and reinforcing NVIDIA’s leadership in the AI-driven industrial revolution.

-

From the Q&A session:

China Market (Vivek Arya’s Question) NVIDIA anticipates $2 billion to $5 billion in H20 chip shipments to China in Q3, pending geopolitical resolutions and additional licences. Supply is ready, with potential to scale if demand and approvals increase.

Long-term, China represents a $50 billion opportunity in 2025, with a projected 50% CAGR, driven by its status as the second-largest computing market and home to 50% of global AI researchers. Licensing Blackwell for China is seen as critical for U.S. tech competitiveness (Huang).

Competitive Landscape and ASICs (Vivek Arya’s Question) Huang addressed the rise of ASICs, noting their complexity and high failure rate in production. NVIDIA’s strength lies in its full-stack AI infrastructure (been saying this for years), available across clouds, on-premises, and edge devices, supporting diverse AI models and frameworks.

Unlike ASICs, NVIDIA’s platform (including GPUs, CPUs, NVLink, and networking) offers unmatched performance per watt and dollar, driving revenue and margins for customers. This holistic approach positions NVIDIA as the preferred choice for AI factories. NB: Broadcom is a fantastic business and a great companion to the GPU King.

Data Centre Infrastructure Spend (Ben Reitzes’ Question) Huang projects $3 trillion to $4 trillion in global data centre infrastructure investment by decade’s end, driven by hyperscalers’ $600 billion annual CapEx (doubled in two years) and growing enterprise and cloud provider demand.

NVIDIA expects to capture ~$35 billion per gigawatt data centre (out of $50 billion–$60 billion total). Power limitations are a key bottleneck, making NVIDIA’s high performance per watt critical for maximising revenue. Only yesterday I discussed this with one of you! Power bottlenecks.

China Long-Term Prospects (Joe Moore’s Question) China’s AI market is vital due to its scale and research output (e.g., DeepSeek). Open-source models from China fuel global enterprise and robotics adoption.

Huang advocates for U.S. companies to address this market to maintain leadership in the AI race, with hopes of licensing Blackwell for China.

Spectrum-XGS and Networking (Aaron Rakers’ Question) NVIDIA’s networking portfolio (NVLink, InfiniBand, Spectrum-X Ethernet) is a growing $10 billion+ annualised business. Spectrum-XGS targets interconnecting multiple AI factories, enhancing efficiency.

Networking choices can yield significant returns (e.g., 10–20% efficiency gains equate to $10 billion–$20 billion in benefits for a $50 billion data centre). InfiniBand leads in performance, while Spectrum-X Ethernet caters to Ethernet-based data centres.

Revenue Guidance Breakdown (Stacy Rasgon’s Question) Colette Kress confirmed Q3 revenue growth of over $7 billion, primarily driven by Blackwell in data centres, encompassing both compute and networking (NVLink-integrated systems). Hopper (H100, H200) remains strong but secondary to Blackwell’s dominance.

Rubin Transition (Jim Schneider’s Question) Rubin, NVIDIA’s next platform, is on an annual release cycle to boost performance per watt and dollar, enhancing customer revenues and margins. It represents a significant leap, similar to Blackwell’s over Hopper, with specifics to be revealed at GTC.

Rubin includes six new chips, already in fabrication at TSMC, and will power third-generation NVLink rack-scale AI supercomputers.

AI Market Growth (Timothy Arcuri’s Question) Huang projects a 50% CAGR (right there is the expected go-forward growth rate; if multiples don't compress, this is what the company should grow at on avg!) for the AI market, with NVIDIA’s data centre revenue expected to grow in line or better, driven by hyperscaler CapEx, AI-native startups ($180 billion funded in 2025), and enterprise adoption via open-source models.

Demand for H100, H200, and Blackwell is exceptionally high, with supply constraints noted across the industry.

Closing Remarks (Huang) Blackwell delivers a generational leap, with NVLink 72 enabling massive scale for reasoning AI. Rubin is in production, targeting multi-gigawatt AI super factories.

The AI market is expanding rapidly, driven by agentic AI, enterprise adoption, and physical AI in robotics. NVIDIA sees a robust outlook through the decade, contributing significantly to the $3 trillion–$4 trillion AI infrastructure buildout.

-

Next quarter net income will be > $33B and accelerating away. A 50% growth rate will flywheel earnings into the next decade.
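For anyone who wants to see where a number like that comes from, here is a rough sketch. It assumes the after-tax take stays at the $5.65 per $10 of revenue mentioned earlier in the thread, which is an assumption rather than guidance, and the function name is just for illustration.

```python
# "$5.65 after tax for every $10 in revenue", taken from earlier in the thread
NET_MARGIN = 5.65 / 10

def projected_net_income(revenue_bn, net_margin=NET_MARGIN):
    """Net income in $bn, ASSUMING the net margin holds steady."""
    return revenue_bn * net_margin

guided = projected_net_income(54.0)  # company guidance for Q3 revenue
bull = projected_net_income(60.0)    # the $60B print predicted in this thread

print(f"at $54B revenue: ${guided:.1f}B")
print(f"at $60B revenue: ${bull:.1f}B")
```

So the > $33B figure lines up with the $60B revenue case rather than the $54B guide, if margins hold.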

-

Also during the call: remember the H20 15% cut to the US Govt (USG).

Nvidia has indicated that it may be able to proceed with H20 chip sales to China without handing over the 15% revenue cut recently proposed by the U.S. government. CFO Colette Kress emphasised that, while the company holds approved export licences, “I don’t have to do this 15% until I see something that is a true regulatory document.” To date, there is no formal law or regulation codifying the requirement, leaving Nvidia under no legal obligation to comply.

The expectation could well be a rollback or a modified approach (DT has form here), given its shaky legal footing and widespread criticism, particularly as China has raised security concerns and paused adoption of the H20. The situation remains fluid and is by no means locked in.

My thoughts are: given they are raising this in an overt manner, no, they won't end up paying. I also think once Rubin is out, Nvidia will get approval to sell Blackwell into China.

-

Selling the less efficient, less powerful versions. China won't be stopped, just slowed down, so the US may as well profit from it. Profit = more money for innovation and taxes too.

On the numbers, it looks, broadly speaking, like we are now on a 60/70/80/90/100 rhythm. The margins are exceptional, and in the future software will continue to play a big part, so I don't see margins falling any time soon. Not in a meaningful way.

AMD's PE is 35% higher than Nvidia's on a fwd basis and 100% higher on a trailing basis. AMD's margins are 32% lower. AMD's problem is no CUDA and an inability to scale out inside a data centre or across data centres. Even based on what analysts think, Nvidia's EPS (earnings) growth over the next several years is 50% MORE than AMD's. So whilst you could make a case for AMD as an investment with a PEG of approx 1.4, which is not expensive but at the same time not attractive, Nvidia has a PEG of about 0.7.
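For reference, PEG is just the PE divided by the expected annual EPS growth rate in percent. A minimal sketch of what the figures above imply, taking Nvidia's fwd PE as 25 (the post says "25 or less", so this is an upper-bound assumption) and AMD's as 35% higher; the helper names are made up for illustration.

```python
def peg(pe, growth_pct):
    """PEG ratio: price/earnings divided by expected EPS growth (in %)."""
    return pe / growth_pct

def implied_growth_pct(pe, peg_ratio):
    """EPS growth rate (in %) implied by a given PE and PEG."""
    return pe / peg_ratio

NVDA_FWD_PE = 25.0                # ASSUMPTION: top of the "25 or less" range
AMD_FWD_PE = NVDA_FWD_PE * 1.35   # "35% higher" on a fwd basis

nvda_growth = implied_growth_pct(NVDA_FWD_PE, 0.7)
amd_growth = implied_growth_pct(AMD_FWD_PE, 1.4)
growth_ratio = nvda_growth / amd_growth

print(f"NVDA implied growth: {nvda_growth:.1f}%")
print(f"AMD implied growth:  {amd_growth:.1f}%")
print(f"ratio: {growth_ratio:.2f}x")
```

On those inputs the implied analyst growth is roughly 36% for Nvidia against roughly 24% for AMD, about 1.5x, which squares with the "50% MORE" comment. At the 50% growth rate Huang talks about, `peg(25, 50)` would come out at 0.5.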

-

This is in regards to Blackwell chips

-

Jensen tells Su 'Hold my Beer'

AMD has been strategically designing its Instinct accelerators to close the gap with NVIDIA’s AI dominance, aiming to meet or exceed NVIDIA’s anticipated roadmap.

With the MI300X/MI325X, AMD leveraged chiplet-based designs on TSMC’s 5nm/6nm nodes, offering competitive FP8 performance for AI training and inference. The upcoming MI450 (Helios, Q4 2026), built on TSMC 3nm with CDNA 4, was positioned to challenge NVIDIA’s expected Rubin architecture by targeting up to 35x MI300X performance (sparse FP8), enhanced Infinity Fabric for better multi-GPU scaling, and superior power efficiency.

AMD’s roadmap, including the MI500 (2027, 256-GPU racks), aimed to scale to 50,000–100,000 GPUs (still below Nvidia's 1 million, but it was a competitive effort), capitalising on chiplet cost advantages and TSMC CoWoS supply (with Broadcom) to erode NVIDIA’s 80–90% AI market share.

Reports suggest AMD’s strategy was to match NVIDIA’s 2026–2027 performance while undercutting on price and power, especially for hyperscale training and enterprise inference (note: it's always next year!).

However, NVIDIA’s Rubin CPX, announced on 9 September 2025, throws a spanner in the works. This GPU, optimised for AI inference “prefill” (context-building for million-token models), delivers 30 petaFLOPS of NVFP4 compute with 128GB GDDR7, four NVENC/NVDEC units, and 3x faster attention processing than Blackwell Ultra. Paired with standard Rubin GPUs (HBM4-equipped) in the NVL144 CPX (hybrid) rack (8 exaFLOPS, 144 GPUs), it offers a disaggregated approach that slashes inference costs by up to 4x while boosting TCO efficiency.

NVIDIA’s redesign of Rubin (upping power to 2,300W) directly counters MI450’s expected specs, suggesting NVIDIA anticipated AMD’s move. The CPX’s specialised inference focus disrupts AMD’s unified training/inference strategy, as MI450/MI500 lack a comparable disaggregated design. NVIDIA’s NVLink 5.0 and CUDA ecosystem further enable scaling to 1 million GPUs, far beyond AMD’s current 10,000–20,000 GPU ceiling.

In short, AMD’s chiplet-based roadmap was on track to challenge NVIDIA’s 2026 performance, but Rubin CPX’s inference optimisation and NVIDIA’s scaling prowess put AMD’s plans in tatters, forcing it to accelerate software (ROCm) and interconnect improvements to stay competitive. Both are also considerably inferior to Nvidia's.

The takeaway is that Nvidia is the King; they clearly have many secret weapons to deploy as and when needed to counter any competitor's claimed advancements. And I say claimed, because all AMD have is a slide deck: products they will produce in the future.

Industry experts hail NVIDIA’s Rubin CPX as a breakthrough for AI inference, praising its ability to handle massive context windows with lightning-fast throughput. Analysts call it a major leap for video, code generation and long-sequence tasks, suggesting it could reshape efficiency and unlock new AI capabilities across data-intensive applications.

-

Yang went on to say they don't have enough capacity as visibility of 'orders' is through 2027 'and it's massive'. The company is opening new factories to meet demand.

As noted previously, Nvidia will be limited by CoWoS-L capacity, which is expanding at a rate of approx 250k chips per quarter, give or take, or $8-10 billion (per quarter). We believe the inflexion point is here: 60/70/80/90/100 quarterly revenues.

-

China tells technology companies to stop buying all NVDA AI chipsets

Hmm, hopefully a buying opportunity and not a change of trend direction in the stock.

Eta -

Looking likely that the buying opportunity was short-lived. Algos bought the dip.

Onwards and upwards, Rodney.

-

China’s directive is an escalation but not an outright ban on NVIDIA chips. It’s a strategic move in the U.S.-China tech rivalry, with limited immediate impact on NVIDIA’s valuation due to its reduced reliance on China. The “ploy” theory is plausible but secondary to China’s broader goal of domestic chip dominance.

It will be resolved in time, and I'm sure even more powerful chips will continue to be sold into China, legally and otherwise. China will fall further behind without Team Green. Fact.

The valuation of Nvidia has zero China component baked in.

-

Breaking: Nvidia invests $5B in Intel and signs a deal to co-develop chips. Intel up 30%. NVDA up $5 :). The details are coming in.

-

here is the news article:

Interesting. I guess TSM are so constrained that this makes perfect sense. It also rescues Intel from almost certain misery. It also broadens Nvidia's reach into the PC market (more growth vectors) and will have AMD worried for sure.

And I just had a look at AMD and yes, the market is not liking it at all. Down 5%

Nvidia (NASDAQ:NVDA) and Intel (NASDAQ:INTC) announced a major deal on Thursday to co-develop PC and data centre chips. Concurrently, Nvidia revealed it would take a $5B stake in Intel.

Nvidia shares increased 2.2% in pre-market trading, while Intel’s jumped 32%. If Intel shares open at that level, it would be their highest in over a year.

The partnership will utilise Nvidia’s NVLink, merging Nvidia’s artificial intelligence and accelerated computing expertise with Intel’s x86 architecture. Intel will produce Nvidia-custom x86 CPUs for data centres and supply x86 system-on-chips that integrate Nvidia’s RTX GPU chiplets for the PC market.

Additionally, Nvidia will invest $5B in Intel’s common stock at $23.28 per share, subject to standard closing conditions and regulatory approvals, the companies stated.

-

Nvidia continues to make moves.

NVDA has invested over $900M in a strategic deal with Enfabrica, a US-based AI hardware startup, securing key staff, including CEO Rochan Sankar, and licensing its cutting-edge networking technology. The transaction, finalised last week, involves cash and stock payments but is not a full acquisition.

Enfabrica’s Accelerated Compute Fabric (ACF) technology connects over 100,000 GPUs, enabling large-scale AI clusters to operate as a single system, reducing GPU idle time by up to 50% and slashing costs.

Nvidia aims to integrate this into its AI infrastructure, enhancing data centre efficiency for training large language models and supporting “AI factories” with partners like Microsoft. This strengthens Nvidia’s dominance in AI networking, giving it a competitive edge by optimising GPU-centric workloads. The deal, echoing Nvidia’s $7B Mellanox acquisition, avoids regulatory hurdles while bolstering its full-stack AI solutions for hyperscalers and enterprises.

-

More on the Intel deal.

Nvidia and Intel have unveiled a partnership to develop custom x86 CPUs for Nvidia’s AI infrastructure platforms, integrating Intel into Nvidia’s ecosystem.

This collaboration merges Nvidia’s AI and accelerated computing expertise with Intel’s CPUs, targeting a USD 50 billion opportunity in data centres and PCs. Nvidia’s GPU chiplets will enhance Intel’s offerings, bringing AI computing closer to users.

While Nvidia dominates AI-accelerated computing and GPUs, custom chip trends and competition are reshaping the semiconductor landscape. Supply chains are evolving dynamically, and the market is expanding and adapting!

This partnership is noteworthy in the context of advancing computing power and AI’s economic impact. Nvidia’s Jensen Huang emphasised, “Together, our companies will build custom Intel x86 CPUs for Nvidia’s AI infrastructure platforms, bringing x86 into Nvidia’s NVLink ecosystem.” Currently it is solely Arm-based.

Both CEOs expressed optimism, focusing on innovation rather than politics or competitors, describing the collaboration as “historic.”

The Verge reported that Nvidia and Intel will connect their architectures via Nvidia’s NVLink system, used in data centres to link GPUs. Huang noted, “Intel will build Nvidia-custom x86 CPUs for integration into our AI platforms, offering greater optionality for advancing AI workloads.” And it clearly advances NVLink, making it even more pervasive! It also heads off any potential expansion of the ASICs market (Broadcom). Great move by Huang and Co.

This fusion targets the USD 30 billion CPU data centre market, aiming to create rack-scale AI supercomputers.

The partnership also extends to the PC market, leveraging the annual 150 million notebook market. Huang highlighted the innovative use of TSMC’s foundry for Nvidia’s GPU chiplets, combined with Intel’s CPUs through multi-technology packaging. This “mix and match” approach enables rapid innovation and complex system development.

-

More news on allowing tier 1 silicon into China... I think it's coming.

David Sacks, White House AI & Crypto Czar, urged the U.S. to reassess its export control policies in light of China’s advancements in AI technology. In a post on X, Sacks highlighted Huawei’s launch of a new AI chip to compete with Nvidia and China’s directive to its companies to avoid purchasing certain Nvidia AI chips. He argued that China’s ability to produce its own chips shows it is not dependent on U.S. technology and aims to compete globally. Sacks warned that restrictive export controls could push countries towards China, risking America’s lead in the AI race. He noted Huawei’s strategy of clustering chips to compensate for weaker performance and criticised bureaucratic delays that benefit Huawei. Sacks advocated for allowing U.S. companies to sell technology abroad with security measures, particularly to allies, to maintain a competitive edge and prevent China from dominating the AI and semiconductor markets.

-

Breaking news

Nvidia just unleashed a massive $100 billion deal with OpenAI, announced 10 mins ago.

It’s not just a cash dump—think of it as a mega partnership where Nvidia’s pumping in up to $100 billion over time to power OpenAI’s huge AI setup, loaded with at least 10 gigawatts of Nvidia’s silicon.

Basically, OpenAI’s taking that money and pouring it right back into Nvidia’s GPUs, solidifying Nvidia’s grip on the AI compute game.

Nvidia’s boss, Jensen Huang, called it “the next big leap,” hyping their decade-long collaboration vibe from the DGX days to ChatGPT blowing up. OpenAI’s Sam Altman is buzzing, saying, “It all starts with compute,” picturing this as the backbone for an AI-driven economy.

They’re talking proper game-changers—AI factories for next-gen models like GPT’s successors.

It’s a proper power move against AMD and big players like Google with their custom chips, while giving OpenAI a head start over Anthropic or xAI. Intel’s $5 billion deal looks like small fry next to this.

Batter up!

-

Details will emerge, but yes, Nvidia are buying a big piece of OpenAI.

To build 10GW will require 7M Blackwell Ultra equivalent chips and cost $300 billion, so one can only assume that the $100B is capital which will then be leveraged with debt. 7M chips is more than all GPUs sold life to date. It's 100k racks. Crazy.
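The back-of-envelope behind those numbers can be sketched as below. The chip count and the $300 billion are this post's own estimates, not company figures, and the 72 GPUs per rack is an assumption taken from the NVLink 72 rack naming.

```python
TOTAL_POWER_W = 10e9     # 10 GW, from the announcement
CHIPS = 7_000_000        # this post's Blackwell Ultra equivalent estimate
TOTAL_COST_BN = 300.0    # this post's build-cost estimate, in $bn
INVESTMENT_BN = 100.0    # Nvidia's stated commitment, in $bn
GPUS_PER_RACK = 72       # ASSUMPTION: one NVLink 72 rack holds 72 GPUs

racks = CHIPS / GPUS_PER_RACK
# All-in site power per chip, so it includes cooling, networking and CPUs
watts_per_chip = TOTAL_POWER_W / CHIPS
cost_per_gw_bn = TOTAL_COST_BN / (TOTAL_POWER_W / 1e9)
funding_gap_bn = TOTAL_COST_BN - INVESTMENT_BN

print(f"racks: {racks:,.0f}")
print(f"all-in watts per chip: {watts_per_chip:,.0f}")
print(f"cost per GW: ${cost_per_gw_bn:.0f}B")
print(f"left to fund with debt or other capital: ${funding_gap_bn:.0f}B")
```

That gives a little over 97k racks (hence the ~100k), about 1.4 kW all-in per chip, $30B per gigawatt and a $200B gap to be financed some other way.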