Nvidia News

-

Hi C,

Just the usual misinformation.

Jensen Huang made the following comment 4 weeks ago. I'll take his word for it. He's a very reliable/honest source.

"Blackwell is in full production, and the ramp is incredible. Customer demand is incredible and for good reason."

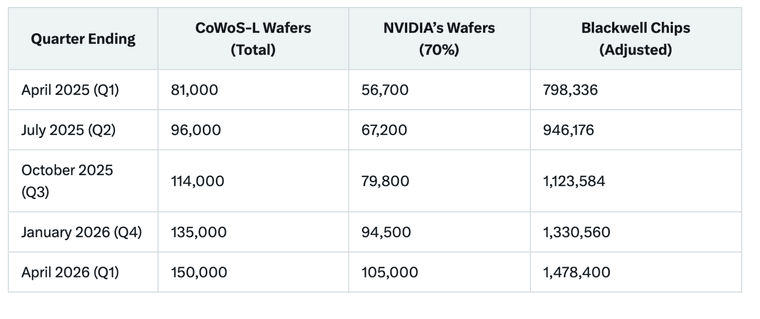

As we have mentioned, the most critical process in the supply chain is packaging (CoWoS-L packaging), and TSMC are doing a very good job of ramping capacity, as planned or better. You can see this in my table, which details the approximate ramp and chip yield.

It's worth pointing out that CoWoS capacity is limited and the China H20 was consuming some of this precious resource. Given the H20 ban, the accelerated transition to B300 could very well be because Nvidia has abandoned H20 packaging, which allows CoWoS-S lines to be retooled for CoWoS-L.

What appears to be a negative is in fact a positive. Nvidia want to keep the Chinese customers happy but if their hands are tied, this change is most likely a net gain because the B300 has margins twice as high as the H20. This is my take.

In a matter of days now we will get to hear from SMCI which will add some colour to the wider landscape.

-

Jensen Huang, Nvidia’s chief executive, is urging the Trump administration to revise AI diffusion policies, particularly export restrictions on AI technology. Speaking in Washington on 30 April 2025, he called for policies to “accelerate the diffusion of American AI technology” globally, criticising Biden’s AI diffusion framework, due to take effect on 15 May 2025, which limits exports of advanced AI chips and model weights to non-allied nations such as China.

Huang highlighted China’s rapid progress, noting Huawei’s advanced AI chips, and stressed the need for US competitiveness. He endorsed Trump’s domestic manufacturing push, pledging Nvidia’s potential $500 billion AI infrastructure investment, and advocated for an energy policy to support AI’s electricity demands.

Huang’s influence may yield results. Nvidia’s pivotal role in AI and Huang’s prior engagements with Trump led to a pause on curbing exports of Nvidia’s H20 chip to China, which reportedly drew $16 billion in orders in Q1 2025. The administration appears open to adjusting rules, possibly using chip exports in trade talks. However, challenges persist. Lawmakers, including House China committee leaders, demand stricter controls, citing security risks from Chinese firms such as DeepSeek exploiting loopholes. Given Nvidia’s clout and the administration’s flexibility, Huang has a fair chance of securing targeted policy changes, such as export exemptions, but a complete overhaul of the framework seems less likely amid security and trade concerns.

-

Nvidia is back to developing AI chips specially made for China, after facing tighter U.S. export restrictions that blocked sales of its high-end processors.

The company had previously created slightly downgraded chips like the A800 and H800 to get around earlier rules, but those were eventually banned too. Now, Nvidia’s rolling out new products — the HGX H20, L20, and L2 — that meet the latest U.S. guidelines while still keeping business ties in China.

These new chips are toned-down versions of Nvidia’s top-performing H100, aimed at staying within legal limits while continuing to serve major Chinese clients. CEO Jensen Huang has stressed that Nvidia will keep working closely with the U.S. government to stay compliant, all while trying to hold onto its slice of China’s massive AI market.

-

You may be wondering why they'd bother, or why China would buy an even less powerful chip when Huawei is apparently so good.

AI developers in China overwhelmingly rely on frameworks built around CUDA: PyTorch, TensorFlow, and many enterprise-grade AI solutions.

Huawei’s chips (such as Ascend) use a different software stack (CANN, plus MindSpore, Huawei's own AI framework), which is not yet as mature or widely supported.

So even if Huawei’s hardware is close in performance, software compatibility, tools and ecosystem support matter more in many real-world applications. Nvidia's full stack is already ingrained in their data centres, so it's very, very difficult to switch. It's the same reason AMD is an also-ran.

Second, it's fake news that Huawei can produce commercial quantities. Its chips are double-patterned, with poor yields, and they are power hungry.

-

Nvidia H20 Chip Modification

Nvidia is modifying its existing H20 chip, rather than developing a new one, to comply with US export restrictions for the Chinese market.

The modified chip is expected to be ready by July 2025, allowing Nvidia to resume sales in China. The $5.5 billion reserve set aside due to the initial export ban in April 2025, which halted H20 sales and impacted inventory and commitments, is likely to be reversed in subsequent quarters as Nvidia adapts to the new regulations and resumes shipments. This is highly relevant to our recent discussions about the news coverage and, of course, the reaction to it.

The headline 'Nvidia lose China' prompted a slew of new opinions. All wrong, again. As soon as the export ban came in, Huang flew out to China (the following day). Obviously he knew it was coming; he sits at the president's table. Under GAAP, the $5.5B provision was required because there was no certainty to the contrary at the time, and the ban fell inside their quarter ending April 27th.

They may or may not amend that reserve for Q1, but most of it will unwind in Q2 and Q3.

What is also interesting: Nvidia have pre-announced earnings misses twice (2011 and 2022), both 8 days after the quarter end and on a Monday. That would correspond to May 5th. We haven't heard anything about a miss. A $5.5B hit not causing an earnings miss? (Impossible, right?)
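The timing argument above is simple date arithmetic. A minimal Python sketch, taking the April 27th quarter end from the post and assuming the 8-day, Monday pattern from past pre-announcements repeats (it is an observed pattern, not a company policy):

```python
from datetime import date, timedelta

# Quarter end for the April 2025 quarter, as stated in the post
QUARTER_END = date(2025, 4, 27)
# Observed lag between quarter end and past pre-announced misses (pattern, not policy)
PREANNOUNCE_LAG_DAYS = 8


def expected_warning_date(quarter_end: date, lag_days: int = PREANNOUNCE_LAG_DAYS) -> date:
    """Date a profit warning would land if the historical 8-day pattern repeats."""
    return quarter_end + timedelta(days=lag_days)


if __name__ == "__main__":
    warning = expected_warning_date(QUARTER_END)
    # date.weekday() returns 0 for Monday
    print(warning.isoformat(), "Monday" if warning.weekday() == 0 else "not a Monday")
```

Running it prints `2025-05-05 Monday`, matching the May 5th date above.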

Perhaps we give it until next Monday. It's a special company, very much misunderstood by most and, frankly, hated by those who missed out.

-

I read somewhere a day or two ago (sorry, I can't provide a source; I think it was the Telegraph) that Nvidia chips were being acquired by China on the black market.

Is there evidence of this and, if so, are the quantities sufficient to have an impact on supply, the market or the share price?

-

I'm sure it happens, but not to the extent some would have you believe. Which chip is highly valuable? Blackwell. It's much, much faster than Hopper. Blackwell chips are in super-high demand and allocation even to big US customers is tight, so where exactly would China get them from? Hopper, I suppose they can get, but is that a big problem for the 'AI race'? I don't think so. Hopper will be much slower.

The Nvidia chip restriction debate is layered with conflicting perspectives. Huawei’s CloudMatrix 384 AI cluster, powered by Ascend 910C chips, is designed to rival Nvidia’s high-end AI systems like the GB200 NVL72. It's inefficient and expensive, but it is powerful enough to get the job (any AI job) done. What it lacks is CUDA, which will definitely slow them down.

China won't be stopped, only slowed down. And that is the aim. It will actually push the US to keep innovating and investing.

When all is said and done, China will keep buying Nvidia chips through legal and other channels because of the software stack. It's a nice market to have for sure, but if it slows down (unlikely), it's not material when you look at the rest of the world.

I noticed a day or so ago that OpenAI (Stargate) is offering 'OpenAI for Countries': a fully built data-centre stack for sovereign purposes, with US government backing. A turn-key solution.

-

Baidu’s Robotaxis Are Coming to Europe – and It’s Great News for NVIDIA

Baidu is getting ready to launch its Apollo Go robotaxi service in Europe, starting with trials in Switzerland by the end of 2025. It’s a big step—not just for Baidu, but for the entire autonomous driving industry. If all goes well, we could see self-driving cars on European roads sooner than many expected.

One of the key players behind the scenes is NVIDIA. Baidu’s robotaxis run on NVIDIA’s advanced autonomous tech, likely using the DRIVE Orin platform—and possibly the new DRIVE Thor in the near future. That’s a big boost for NVIDIA, which is already dominating the AI hardware space.

This launch isn’t just a test run—it’s a sign that robotaxis are moving from niche tech to real-world transport. Baidu has already scaled up in China, and if it can overcome Europe’s stricter rules and more complex roads, it could roll out hundreds or even thousands of vehicles across the continent.

For NVIDIA, it means more demand for its chips and a stronger foothold in the future of mobility. For everyone else, it’s one step closer to catching a self-driving ride to work.

-

An update on the $5.5B H20 provision

NVIDIA’s ability to absorb a $5.5 billion provision for H20 chip export restrictions in April 2025 without issuing a profit warning strongly suggests they performed exceptionally well in Q1. They have always issued warnings in the past, and the timing of those warnings supports the conclusion that they won't need to this time because they did so well. To be honest, I'm staggered, assuming this holds (and the evidence is there). This would need to be a beat of $10B+. Did they guide $41B and manage $50B?

-

Bloomberg reported that the Trump administration is close to sorting out a deal to let the UAE bring in 500,000 Nvidia AI chips every year until 2027. This comes after scrapping the Biden-era rules that limited AI chip exports.

It’s all part of a bigger push to boost tech ties with Gulf countries, helping the UAE ramp up its AI game while keeping an eye on US security concerns.

Assuming a fully built system (rack), this is approval to spend $30 billion annually ($60k per chip), or about 7,000 racks at today's prices.

I call this 'Fully Loaded Cost Allocation': we aggregate all Nvidia rack costs and apportion them across the GPU count. A rack is not just GPUs; it also requires CPUs, DPUs, switches, etc.
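A minimal sketch of the 'Fully Loaded Cost Allocation' arithmetic, using the post's $60k-per-GPU figure and assuming 72 GPUs per rack (an NVL72-style configuration). The per-rack cost is derived from those two numbers, not a quoted price:

```python
# Figures from the post; GPUS_PER_RACK assumes an NVL72-style rack
UAE_CHIPS_PER_YEAR = 500_000
FULLY_LOADED_PER_GPU_USD = 60_000   # includes each GPU's share of CPUs, DPUs, switches, etc.
GPUS_PER_RACK = 72


def fully_loaded_cost_per_gpu(total_rack_cost_usd: float, gpu_count: int = GPUS_PER_RACK) -> float:
    """Spread the cost of everything in a rack across the GPUs it contains."""
    return total_rack_cost_usd / gpu_count


annual_spend_usd = UAE_CHIPS_PER_YEAR * FULLY_LOADED_PER_GPU_USD
racks_per_year = UAE_CHIPS_PER_YEAR / GPUS_PER_RACK
implied_rack_cost_usd = FULLY_LOADED_PER_GPU_USD * GPUS_PER_RACK

print(f"Annual spend: ${annual_spend_usd / 1e9:.0f}B")
print(f"Racks per year: {racks_per_year:,.0f}")
print(f"Implied cost per rack: ${implied_rack_cost_usd / 1e6:.2f}M")
print(f"Check: ${fully_loaded_cost_per_gpu(implied_rack_cost_usd):,.0f} per GPU")
```

It prints $30B, 6,944 racks (the circa 7,000 quoted) and an implied rack cost of about $4.32M, which allocates back to $60,000 per GPU.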

-

It's worth reposting an old slide showing the approximate ramp-up of supply. Today, various sources suggest Nvidia has a known backlog of committed orders totalling 9.5 GW of data centre capacity, and Huang has stated that 1 GW is worth $40-50B in revenue. That's roughly $380-475B, approaching half a trillion dollars.
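Multiplying the reported backlog by Huang's revenue-per-gigawatt range gives the size of the pipeline. A minimal sketch, treating both inputs as the rough estimates quoted in the post:

```python
BACKLOG_GW = 9.5                       # reported committed backlog, per various sources
REVENUE_PER_GW_USD_BN = (40.0, 50.0)   # Huang's stated range per gigawatt


def backlog_revenue_range_bn(backlog_gw: float,
                             per_gw_range: tuple[float, float] = REVENUE_PER_GW_USD_BN) -> tuple[float, float]:
    """Convert a backlog in gigawatts into a low/high revenue range in $bn."""
    low_per_gw, high_per_gw = per_gw_range
    return backlog_gw * low_per_gw, backlog_gw * high_per_gw


low, high = backlog_revenue_range_bn(BACKLOG_GW)
print(f"${low:.0f}B to ${high:.0f}B")
```

This gives $380B to $475B, so 'half a trillion' is the top end of the range.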

The industry is moving to describing the scale of installations in megawatts and gigawatts. So in the above example, 500k GB300s would be rated at:

140 kW per rack (72 x 1.4 kW GPUs, plus CPUs, memory, etc.)

500k / 72 = circa 7,000 racks

7,000 x 140 kW = 980 megawatts, or 0.98 GW

From the slide you can clearly see the constraint and how supply will not catch up with demand for 'years'; it's not possible to accelerate capacity (CoWoS-L) much faster than the indicated cadence. What we can take away from this is that demand is colossal, it's not slowing, and there is multi-year committed growth.
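The rack-power arithmetic above can be reproduced in a few lines of Python. A minimal sketch using the post's figures (1.4 kW per GPU, about 140 kW per rack, 72 GPUs per rack); it counts rack IT load only and ignores cooling overhead:

```python
GPUS = 500_000          # GB300s in the UAE example
GPUS_PER_RACK = 72
GPU_POWER_KW = 1.4      # per GPU, from the post
RACK_POWER_KW = 140     # whole rack incl. CPUs, memory, networking (post's rounded figure)


def facility_power_mw(gpus: int, rack_power_kw: float = RACK_POWER_KW,
                      gpus_per_rack: int = GPUS_PER_RACK) -> float:
    """Rack IT load in megawatts for a GPU count (no cooling overhead included)."""
    racks = gpus / gpus_per_rack
    return racks * rack_power_kw / 1000  # kW -> MW


gpu_share_kw = GPUS_PER_RACK * GPU_POWER_KW   # GPUs alone, about 100.8 kW of the 140 kW
exact_mw = facility_power_mw(GPUS)            # exact rack count (about 6,944 racks)
rounded_mw = 7_000 * RACK_POWER_KW / 1000     # using circa 7,000 racks

print(f"GPU share per rack: {gpu_share_kw:.1f} kW of {RACK_POWER_KW} kW")
print(f"Exact: {exact_mw:.0f} MW, rounded: {rounded_mw:.0f} MW ({rounded_mw / 1000:.2f} GW)")
```

With the exact rack count it comes to about 972 MW; rounding to 7,000 racks gives the 980 MW (0.98 GW) quoted.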

There will always be clunky quarters here and there, but the trend is for QoQ revenue expansion as far ahead as one can see (into 2027).

-

Another $200 Billion Deal

5GW AI Data Center Complex: G42 is spearheading the development of one of the world's largest AI data centre complexes in Abu Dhabi.

The facility is planned to span 10 square miles, initially operating with 1 gigawatt (GW) of power and eventually expanding to 5 GW. This capacity is sufficient to support over 4 million next-generation Nvidia GB200-equivalent chips. The project aligns with the UAE's ambition to diversify its economy and position itself as a global AI leader.

For comparison, total Blackwell production in 2025 is estimated at anywhere from 4 to 5 million.

It isn't slowing down!

-

Unfortunately, prices are already very expensive. It's a capital-intensive, modest-margin business which is not easy to scale (without capital). I'm not so keen to pay a P/E of 40+ for 25% revenue growth. There are over a dozen very big and fierce competitors too. It's a great business, but it's not at a fair price, imo.

We chose Oracle because of its moat: its ERP/database clients. This is mission-critical data used by most companies, including the biggest in the world. When those clients get into AI, Oracle Cloud will get the business for one simple reason: they will not move this data to anyone else, due to security, access and their very survival if something serious happens.

Oracle's enormous remaining performance obligations (contracted future revenue) proved that point well.

-

@Adam-Kay said in Nvidia News:

Unfortunately prices are already very expensive. It's a capital intensive, modest margin business which is not easy to scale(without capital). I'm not so keen to pay PEs of 40+ and 25% revenue growth. There are over a dozen very big and fierce competitors too. It's a great business but it's not at a fair price imo.

Sorry to be slow but can someone re-explain that paragraph using simple words? Thanks.

-

The infrastructure (rather than the IT) of data centres costs loads to build.

I think

-

Hi O,

Capital Intensive = requires investment in plant and machinery to grow revenues.

P/E is a quick snapshot of a company's share price relative to its earnings per share. It's not the only metric to look at, obviously, but in this case it's high, and if I were to pay a premium I'd want to see more growth and better margins.

A company like KLAC (KLA Corporation) also requires capital to grow; however, it has much better margins (65%) and a near-monopoly in certain key areas. Its growth rate isn't all that different, yet its P/E is almost half! As I said, there are many more things to look at. Hope this helps.
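The P/E comparison can be made concrete with a small sketch. The share prices, earnings and growth rates below are hypothetical, chosen only to mirror the "40+ versus almost half" comparison. The PEG ratio (P/E divided by the growth rate) is a common shorthand for the premium paid per unit of growth, not a metric used in the posts above:

```python
def pe_ratio(share_price: float, eps: float) -> float:
    """Price-to-earnings: dollars paid today for each dollar of annual earnings per share."""
    if eps <= 0:
        raise ValueError("P/E is not meaningful for zero or negative earnings")
    return share_price / eps


def peg_ratio(pe: float, growth_pct: float) -> float:
    """P/E divided by the expected growth rate (in %); lower means cheaper growth."""
    if growth_pct <= 0:
        raise ValueError("PEG needs a positive growth rate")
    return pe / growth_pct


# Hypothetical: two companies growing at a similar 25%, one at 40x earnings, one at half that
expensive_pe = pe_ratio(share_price=200.0, eps=5.0)
cheaper_pe = pe_ratio(share_price=100.0, eps=5.0)

print(f"Expensive: P/E {expensive_pe:.0f}, PEG {peg_ratio(expensive_pe, 25):.1f}")
print(f"Cheaper:   P/E {cheaper_pe:.0f}, PEG {peg_ratio(cheaper_pe, 25):.1f}")
```

With the same growth, half the P/E means paying half as much for each dollar of earnings (PEG 0.8 versus 1.6).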

-

In an interview on CNBC last night, Elon Musk confirmed that Tesla's Optimus robot system is powered by Nvidia's Jetson Orin and Thor chips. This endorsement highlights Nvidia's critical role in Tesla's humanoid robotics, reinforcing its leadership in AI hardware and potentially boosting its stock and investor confidence.

Notable robotics companies using NVIDIA systems:

Tesla

Siemens

BYD Electronics

Teradyne Robotics

Intrinsic (Alphabet)

Boston Dynamics

Agility Robotics

XPENG Robotics

Figure AI

Fourier Intelligence

Sanctuary AI

1X Technologies

Apptronik

Unitree

Addverb

Ati Motors

Ottonomy

Vention

Standard Bots

KUKA

Universal Robots

Foxconn

Waabi

Neura Robotics

Skild AI

Virtual Incision

Galbot

Hillbot

IntBot

Market TAM coverage (Total Addressable Market): NVIDIA's systems are integral to a wide range of robotics applications, from humanoid robots to industrial automation and autonomous vehicles. Given the diversity and prominence of these companies across manufacturing, healthcare, logistics and automotive sectors, NVIDIA likely captures ~80-90% of the robotics AI hardware and software market TAM.

Musk also mentioned his interest in acquiring Uber, no doubt for its best-in-class ride-booking system and network (another heavy user of Nvidia DRIVE systems).

Nvidia is dominating what are clearly going to be massive markets: robotics and autonomous vehicles. The future looks very bright.

-

Quanta, the AI server giant, sees triple-digit growth in AI server sales this year and says capacity is now full, according to media reports citing Senior VP Mike Yang:

- GB300 server shipments likely to start in September, with mass production in Q4
- GB200 server shipments began in March
- H200 servers still led in Q1 and will continue to in Q2

Interestingly, Quanta reports that its biggest seller is still the older-architecture H200. I would have expected Hopper to be behind Blackwell by now. This is good news because Hopper is in plentiful supply. Further, Quanta is fully booked. Note: Quanta is an ODM (like Foxconn), producing generic reference designs for large CSPs, called 'Big Iron'. This is quite distinct from the fully custom designs demanded by the AI model operators and built by the likes of SMCI (an OEM).

Elon Musk said xAI plans to build a 1-million-GPU facility outside Memphis, Tennessee, and will buy more chips from Nvidia, AMD and possibly others, CNBC reports. Musk added that xAI already has “over 200,000 GPUs training coherently” at its Colossus data centre in Memphis. “A few years ago, I made a very obvious prediction, which is that the limitation on AI will be chips,” he said. These are additional GPUs, which fits with the planned Grok expansion to 2M GPUs by the end of 2026.

Malaysian Deputy Minister of Communications Teo Nie Ching said in a speech on May 19 that Malaysia would be the first to activate an unspecified class of Huawei “Ascend GPU-powered AI servers at national scale”, but a day later, on May 20, Teo’s office said it was retracting her remarks on Huawei without explanation. It is unclear whether the project will proceed as planned.

Maybe the US had something to do with that, because using Huawei chips now breaks US law. Shame.

-

Just seen some news about Oracle investing $40B in Nvidia chips. Is that old or new news?