Nvidia News

-

Reports are circulating that Huawei’s Ascend AI chips, notably the Ascend 910C, are struggling to gain traction in China’s AI market, with some calling them subpar. The chips are reportedly plagued by overheating issues, which is a big red flag for reliability and has put off major Chinese tech firms, many of whom are Huawei’s rivals and wary of adopting its tech. Huawei’s software, the Compute Architecture for Neural Networks (CANN), is also lagging behind NVIDIA’s CUDA platform. CUDA’s well-established ecosystem and developer-friendly tools create a lock-in effect, making it tough for Huawei to compete, as CANN struggles with compatibility and performance. While U.S. restrictions on NVIDIA’s H20 GPUs have given Huawei a slight edge in China, its global ambitions are hamstrung by sanctions and supply chain woes. Production yields for the 910C, built on SMIC’s 7nm process, are reportedly stuck at around 30%, limiting scalability. Despite Huawei’s claims that the 910C rivals NVIDIA’s H100, these issues—overheating, weaker software, and geopolitical barriers—mean it’s got a steep hill to climb to challenge NVIDIA’s dominance in the AI chip race.

Don't count out the new Nvidia B20 (H20 replacement). Imo Nvidia is very much still in the China market. It's also a reminder that CUDA is king, not just the chip. Huawei is on 7nm vs TSMC's 2nm/5nm with yields at 90%. Maybe Huawei needs some DLC.
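To put the yield gap in numbers, here is a rough sketch. It assumes (my assumption, not something from the reports) that both processes have the same wafer cost and the same number of candidate dies per wafer, so yield is the only difference. The function name is just for illustration.

```python
# Rough cost-per-good-die comparison using the yields quoted in this thread.
# Assumption (not from the reports): equal wafer cost and equal candidate
# dies per wafer on both processes, so only the yield differs.

def relative_cost_per_good_die(yield_fraction: float) -> float:
    """Cost of one working die, in units of the cost of one candidate die."""
    if not 0 < yield_fraction <= 1:
        raise ValueError("yield must be in (0, 1]")
    return 1.0 / yield_fraction


low_yield_cost = relative_cost_per_good_die(0.30)   # ~30% reported for the 910C
high_yield_cost = relative_cost_per_good_die(0.90)  # ~90% quoted for TSMC
ratio = low_yield_cost / high_yield_cost

print(f"A working die costs about {ratio:.1f}x more at 30% yield than at 90%")
```

Under those assumptions every good 910C die carries roughly three times the wafer cost, before any difference in wafer pricing or die size is counted.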

-

JH is arriving in Europe this weekend and is due to meet many Heads of State as well as present at a couple of conferences. Likely we will hear of further Sovereign AI/Token factory initiatives next week! There could be a giant EU project brewing to rival Stargate.

-

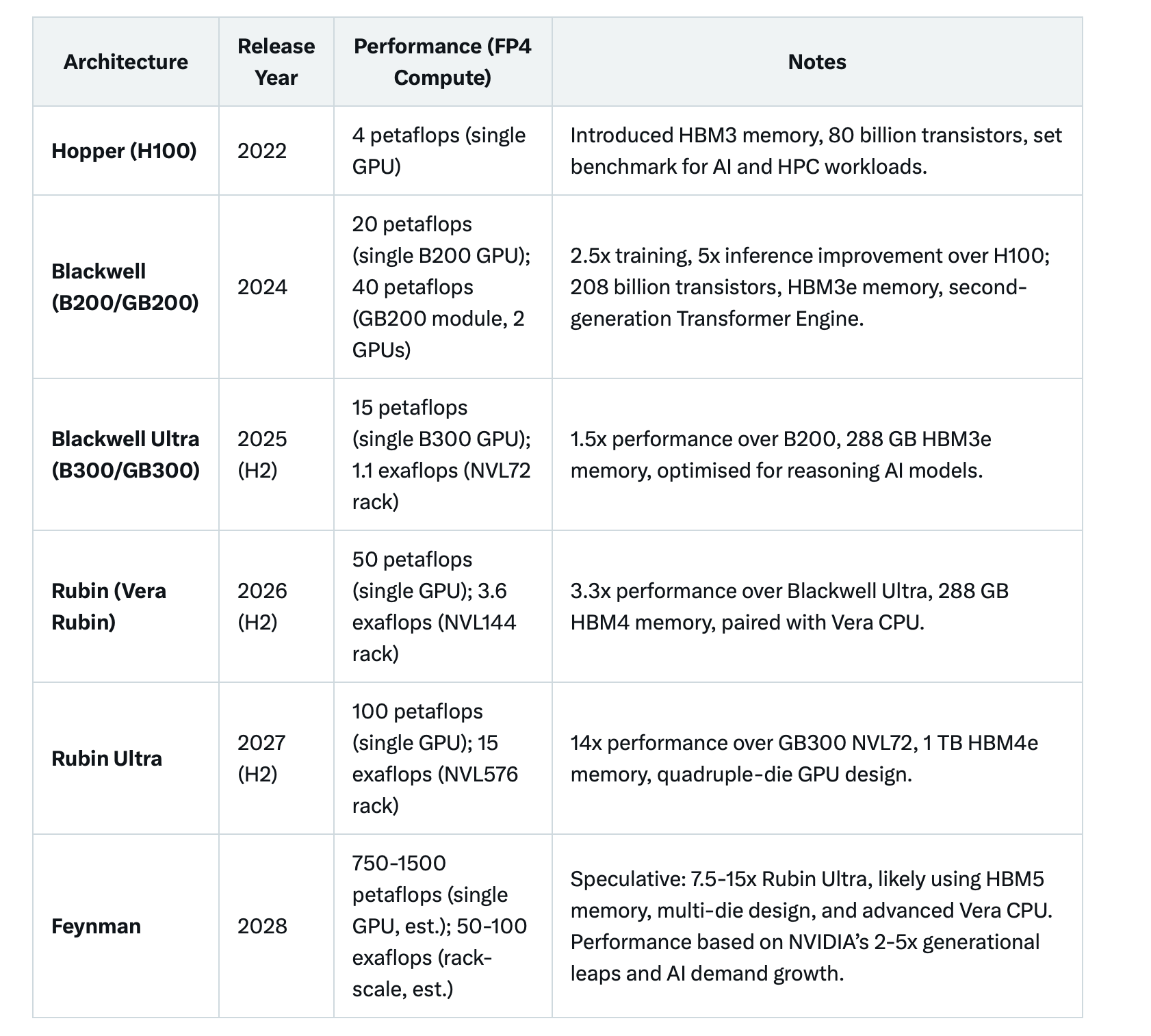

Nvidia’s next-gen Rubin GPU and Vera CPU chips will finish tape-out (the final stage of the design process) at TSMC in June and begin trial production, with sample chips in September at the earliest, according to media reports citing unnamed supply chain sources. TSMC will make Rubin on N3P with CoWoS-L advanced packaging.

Sample chips will then be delivered to ODM partners so they can start incorporating them into their various product designs. The timeline, which suggests mass production starting around Q2 2026, is approx 9 months ahead of the previously publicised roadmap: a staggering speed of architectural progress.

Given the R200 is rated at 1.8 kW per chip, I would expect full-sized racks to have a TDP (Thermal Design Power) of up to 180 kW per rack and to cost somewhere between $5 million and $6 million.

Note: The Rubin chip is packaged on the same CoWoS-L process as Blackwell, which means we should not see the clunky ramp observed during the Hopper to Blackwell transition. Further, existing cooling systems (CDUs rated to 250 kW) have built-in headroom to manage thermals, which should mitigate any cooling issues.
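A quick sanity check on the cooling headroom, using only the figures in this post (1.8 kW per chip, up to 180 kW per rack, CDU rated to 250 kW). The chip count is just a power ceiling under the assumption that GPUs drew all the rack power, which they won't, since CPUs, switches and fans take a share. It is not a rack spec.

```python
# Back-of-envelope rack power check using the figures quoted in this post.
CHIP_POWER_KW = 1.8     # R200 rating per chip
RACK_TDP_KW = 180.0     # upper estimate for a full-sized rack
CDU_RATING_KW = 250.0   # existing coolant distribution unit rating

# Ceiling on chip count if GPUs used ALL rack power (an assumption that
# ignores CPUs, networking and fans, so the real number is lower).
chip_ceiling = RACK_TDP_KW / CHIP_POWER_KW

# Spare cooling capacity if the rack really does run at its full TDP.
headroom_kw = CDU_RATING_KW - RACK_TDP_KW
headroom_pct = 100.0 * headroom_kw / CDU_RATING_KW

print(f"Power ceiling: {chip_ceiling:.0f} chips per rack")
print(f"Cooling headroom: {headroom_kw:.0f} kW ({headroom_pct:.0f}% of CDU rating)")
```

So even at the top of my estimate, the CDU would be running with about 70 kW in hand.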

-

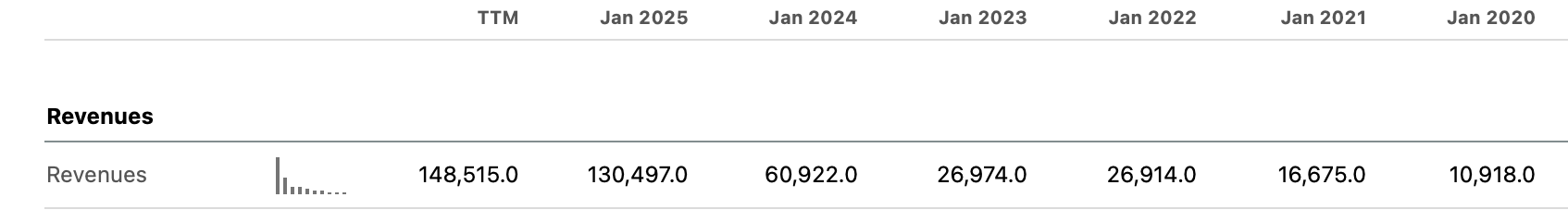

Hi ExIM

Actually the annual performance increase is much more, creating exponential performance gains. By 2028 we should see up to 700X more performance than GB200 today. By 2031, about 1 million X. This is what Jensen is driving at when he says 'I want to reduce the cost of inference to almost zero'. You have machines that are so powerful they produce vastly more tokens for the same cost. Whilst other costs, like power and bandwidth bottlenecks, mean the cost drop is non-linear, you will see today's cost of 10 cents per 1,000 tokens fall to 0.001 cents or less. There is a direct relationship between the number of tokens and the complexity of the problem to be solved.
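For anyone who wants to see what those multiples mean per year, here is a small sketch. It assumes 'today' is 2025 and that the gain compounds at a constant rate, both of which are my simplifications rather than anything Nvidia has published.

```python
# Implied constant yearly multiplier behind the 700X and 1,000,000X figures.
# Assumptions (mine): the baseline year is 2025 and growth compounds evenly.

def implied_annual_multiplier(total_gain: float, years: int) -> float:
    """Yearly multiplier that compounds to total_gain over the given years."""
    if total_gain <= 0 or years <= 0:
        raise ValueError("total_gain and years must be positive")
    return total_gain ** (1.0 / years)


BASE_YEAR = 2025
targets = {2028: 700.0, 2031: 1_000_000.0}

multipliers = {
    year: implied_annual_multiplier(gain, year - BASE_YEAR)
    for year, gain in targets.items()
}

for year, m in multipliers.items():
    print(f"{year}: roughly {m:.1f}x per year, every year from {BASE_YEAR}")
```

Both targets work out to something like a 9x to 10x gain every single year, which is why the token cost curve falls so steeply.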

In AI, a token is a small unit of text, like a word, punctuation mark, or part of a word, that the system processes.

How is token generation growing:

Microsoft processed over 100 trillion tokens, a ~5× increase YoY

Google’s monthly token volume increased by ~50× in the past year

AI Agents (“super agents”):

Per Barclays, AI super-agent users might consume 36 million to 356 million tokens/year per user
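To see why the token price matters for super agents, here is a rough sketch that prices the Barclays usage range at the two per-token costs mentioned earlier in this post (10 cents and 0.001 cents per 1,000 tokens). It ignores any difference between input and output token pricing, which is a simplification.

```python
# Annual token bill per super-agent user at the two prices quoted in the post.
# Simplification: a single flat price per 1,000 tokens, no input/output split.

def annual_token_cost_usd(tokens_per_year: float, cents_per_1k_tokens: float) -> float:
    """Yearly cost in US dollars for a given token volume and price."""
    return tokens_per_year / 1_000 * cents_per_1k_tokens / 100


USAGE = {"low": 36e6, "high": 356e6}           # Barclays range, tokens/year/user
PRICES = {"today": 10.0, "projected": 0.001}   # cents per 1,000 tokens

costs = {
    (usage_name, price_name): annual_token_cost_usd(tokens, cents)
    for usage_name, tokens in USAGE.items()
    for price_name, cents in PRICES.items()
}

for (usage_name, price_name), cost in costs.items():
    print(f"{usage_name} usage at {price_name} price: ${cost:,.2f} per user per year")
```

At today's price the range is roughly $3,600 to $35,600 per user per year. At the projected price it drops to well under $5, which is the point where agents become a no-brainer.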

-

Today

1 Billion? I didn't expect much given the state of our books.

Leaders he says....: The European Commission, led by President Ursula von der Leyen, announced the InvestAI initiative at the AI Action Summit in Paris. This initiative aims to mobilise €200 billion for AI investments across Europe over the next five years.

-

Ian Buck, NVIDIA's Vice President of Hyperscale and High-Performance Computing, made the comment....."You're only seeing a small glimpse in the public papers of what the true behind the scenes world class work has actually been able to do," about AI during a fireside chat at the Bank of America Global Technology Conference.

His statement highlights that the public and even industry observers are only privy to a limited view of AI's current capabilities. This suggests that significant advancements, likely in areas like AI model performance, scalability, or novel applications, are being developed in private by leading tech companies, research labs, or NVIDIA itself. Buck's role at NVIDIA, a key player in AI hardware and software, implies he’s referring to cutting-edge work enabled by their technology, such as advanced GPU architectures or software platforms like CUDA.

I think this makes a lot of sense and is very interesting. Why else would the industry pile a trillion USD+ into it? It explains the unprecedented surge in spending and the race to scale.

BTW GPT 5 is due out during the summer.

-

Jensen is presenting a keynote in Paris this morning (10am). Expect some tasty DC deals with our European neighbours to drop.

-

Breaking news: Mistral and Nvidia are building an AI cloud. Nvidia CEO talking to Macron (Paris, later).

-

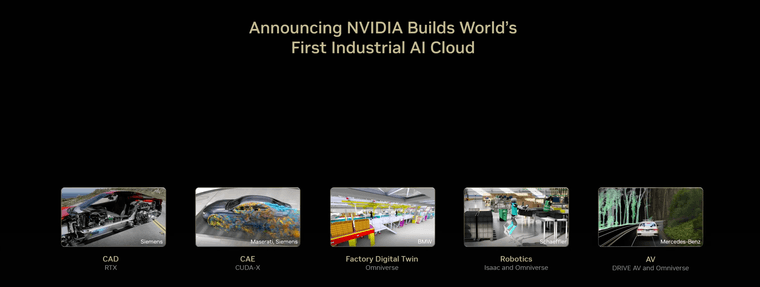

Jensen teased, stating full details will be announced on Friday. This is part of their Industrial Revolution 2.0, where all industries will be transformed. Designed in the Omniverse and automated via Robotics and Autonomous Vehicles (anything that moves). Be in no doubt: heavy industry, design and logistics will change and Nvidia is at the very heart of it all.

-

Interesting thoughts from JH

We know demand is massive: this number equates to around 30GW. I thought 15GW. Obviously they can't supply it right now but they will over the next few years. Slow down, anyone?

ASICs are the custom chips (Google's TPUs, for example) used by AWS/GOOG and to an extent Meta. They are chips designed for one task only. Generally, whilst the market is big in $ terms, GPU $ is about 12X that of ASICs. That won't change. Jensen thinks his GPUs are actually more cost effective, even when performing the tasks ASIC chips were designed to do.

-

During GTC France this week Jensen mentioned that he would announce some data centre deals relating to Europe on Friday. Being the stalker that I am, I see he met German Chancellor Friedrich Merz. I'm speculating, but this could be a massive German initiative, multi-billions. Let's see what drops.

-

Nvidia Europe DC deals announced today. When fully scaled it's quoted as 2GW across 4 countries incl Germany, Italy, France, UK. Details are emerging but we know that 1GW is 40-50 billion.
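Scaling the 40-50 billion per GW rule of thumb gives a feel for the size of these numbers. This is only a sketch: the post doesn't state a currency, the cost per GW is treated as flat, and the 30 GW line uses the demand figure from the earlier post in this thread.

```python
# Scale the "1GW is 40-50 billion" rule of thumb to other build-out sizes.
# Assumptions: flat cost per GW regardless of scale; currency left unstated
# because the post doesn't give one.
COST_PER_GW_BN = (40, 50)  # (low, high) in billions per gigawatt


def buildout_cost_bn(gigawatts: float) -> tuple[float, float]:
    """Return the (low, high) cost in billions for a build-out of this size."""
    low, high = COST_PER_GW_BN
    return gigawatts * low, gigawatts * high


europe_low, europe_high = buildout_cost_bn(2)    # the European deals
demand_low, demand_high = buildout_cost_bn(30)   # demand figure quoted earlier

print(f"2 GW:  {europe_low:,.0f} to {europe_high:,.0f} billion")
print(f"30 GW: {demand_low:,.0f} to {demand_high:,.0f} billion")
```

On that basis the European deals would be worth 80 to 100 billion when fully scaled, and the 30 GW demand figure would be north of a trillion.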

-

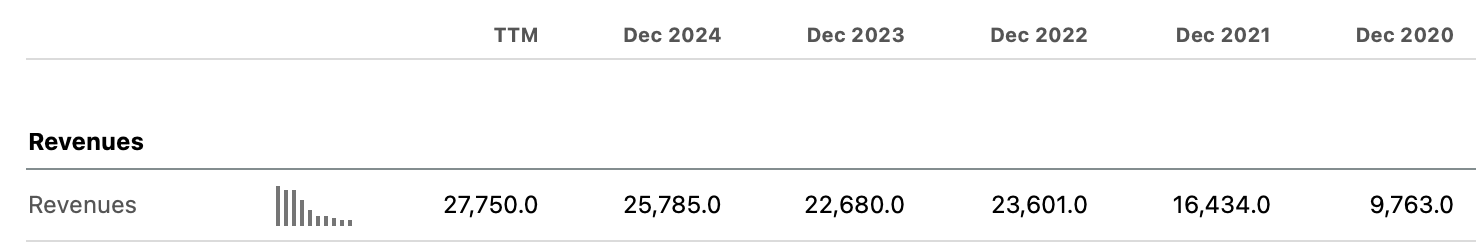

Why we won't be rushing to buy AMD

Not sure about their math: 3-4% and 400 billion.

AMD claim a GPU as fast as Nvidia's (next year...): a lab chip which they can't make in volume. Demand is weak due to 'no CUDA', and it can only be networked to 10k chips vs Nvidia's 100s of thousands. As we have said before, 1 chip is meaningless. How good is your total solution? Still years behind.

I find it baffling why some investors hold both stocks. AMD are not a credible competitor imo

-

The Nvidia B40 (also known as the RTX Pro 6000D) is being positioned as the replacement for the H20 GPU in China, following U.S. export restrictions on advanced AI chips. It is based on Nvidia's Blackwell architecture and uses GDDR7 memory, allowing it to comply with trade controls.

Availability: Production began in June 2025, with general rollout expected in July and throughout Q3. Technically this will catch the current quarter (Q2, being May-July), so there is potential for additional revenue.

Price: Estimated at $6,500–$8,000 USD per unit, making it more affordable than the H20, which exceeded $10,000 USD.

Sales potential: Analysts expect B40 shipments could reach $1–3 billion USD in revenue per quarter, depending on demand and licensing constraints.

-

I see that AMD are giving it large at a conference in California….the report goes on and poses the question is it the end for Nvidia ….

-

AMD like many other companies in the sector like their marketing spin. Today AMD has somewhere between 3-5% market share in the DC.

Are their chips poor? No, they are good. However, what are we seeing out in the wild? A few chips, 100s? Try 200k and soon 1M in the data centre. AMD have no real networking ability. They cannot scale out their clusters such that the DC acts as one giant GPU, all talking to one another. For small systems (a few nodes) they work just fine, but giant systems, nope.

Further, where are these chips? On a slide deck and in a lab. Nothing they have produced to date is remarkable. And remember one thing: AMD's valuation is more expensive than Nvidia's based on accepted metrics. They have lower growth and lower margins, so why buy the stock rather than buy Nvidia? AMD will grow and the stock will appreciate over time, I have no doubt, but it's a follower. Table scraps is my opinion.

Software Ecosystem (Most Important)

CUDA: Proprietary, mature, and widely adopted GPU computing platform.

Thousands of optimised libraries (cuDNN, cuBLAS, TensorRT, etc.).

Seamless support for AI frameworks (PyTorch, TensorFlow, JAX).

Massive developer community (millions who prefer CUDA because end customers want CUDA) and industry-standard tooling.

AMD ROCm is open-source but underdeveloped by comparison: missing features, poorer documentation, and inconsistent support across models and frameworks.

Networking and Scaling

Nvidia supports hundreds of thousands of GPUs in a single cluster (e.g., Selene, Jupiter).

Uses NVLink, NVSwitch, and InfiniBand (via Mellanox) for ultra-low latency, high-bandwidth scaling.

NCCL (communication library) scales efficiently and is deeply integrated into Nvidia's stack.

AMD supports a few thousand GPUs max per cluster. No NVLink equivalent. Infinity Fabric is decent within nodes, but poor between nodes.

Tooling and Developer Support

Nvidia offers:

Nsight Systems & Compute for profiling

CUDA-GDB for debugging

Triton Inference Server for deployment

Mature support in all major ML and HPC stacks.

AMD tooling is basic and lacks the polish, breadth, and deep integration needed for industrial-scale projects.

Vertical Integration

Nvidia offers:

THE FULL STACK

Omniverse, CUDA, and cuOpt for simulation and optimisation

Delivers full-stack solutions from chip to cloud, ready to deploy.

AMD offers EPYC + Instinct chips but no full-stack equivalent; it relies heavily on partners and lacks an ecosystem play.

Market Trust and Ecosystem Lock-in

Enterprises, research labs, and hyperscalers have built years of infrastructure on Nvidia.

Switching is costly—not just financially, but in engineering effort and risk.

Nvidia also provides enterprise-grade support, training, and long-term stability.

AMD’s competitive silicon is often overlooked due to software and ecosystem gaps.

Conclusion: Nvidia is 5–7 Years Ahead

CUDA alone puts Nvidia 5+ years ahead in developer adoption and ecosystem maturity.

In networking, software, and scalability, AMD has only recently started catching up, but is still 5–7 years behind in real-world deployments.

As we discussed a year ago, the DC is the land grab and Nvidia have put their stake in the ground. To displace them would need 'trillions' and a system that was much better. You will hear 'AMD are catching up'. Yes, to old Nvidia tech. Nvidia are not standing still, are they? In fact I think the gap is getting bigger.

Unless AMD makes massive coordinated progress in software, interconnects, and cloud enablement, this gap will persist.

-

And taking all of that into account, AMD still need to make the chips. TSMC and MU seem pretty busy making everyone else's chips, so AMD will be severely constrained for the next several years.

Enough said. PE multiples are pretty much the same. Growth is not!

And for some insight into investment decisions: what should keep Nvidia out in front, assuming we take Huang out of the equation? A visionary leader who has not put a foot wrong. It's very easy to say we will take him on and win.

With all the money they make, what are they doing with it? Spending more on R&D, partnering with or buying the best complementary tech (companies) and hiring the best talent. If you are top of your class at MIT, would you rather work for Nvidia or AMD? Nvidia have the best toys, and I doubt money is the primary motivator, but they prob pay more too. I believe Nvidia offer employees very generous/discounted share incentives, 22 weeks maternity, free food in their offices, health benefits, industry-leading salaries and a choice of very high-end office furniture. Whatever helps to keep the tech-bros happy.

The bigger picture is Nvidia is massive in so many segments: robotics, autonomous vehicles, Omniverse, health and human sciences, quantum. You rarely hear anything about AMD, well, apart from their self-promotion. I'm not worried about AMD in the slightest.