-

Just stating the obvious. I've factored it down to 10 for simplicity.

-

-

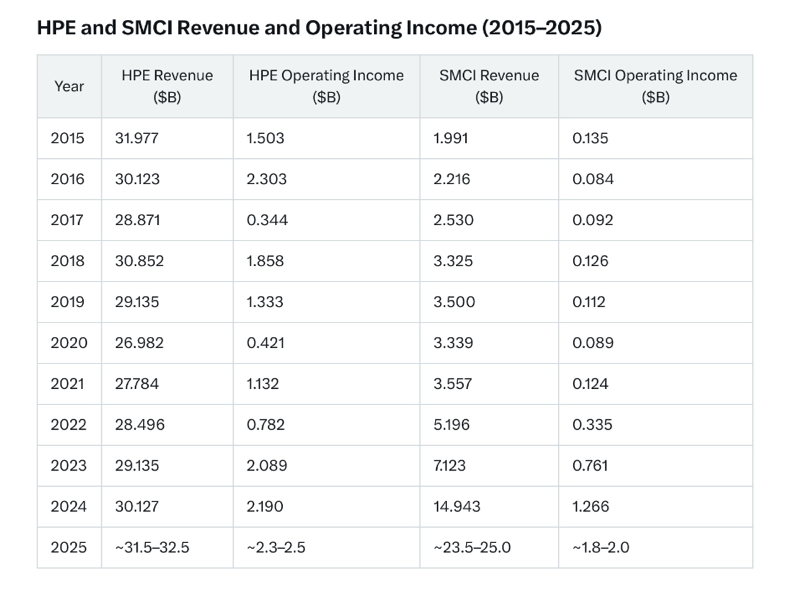

A good example of relative values, growth and investment choices: HPE (Hewlett Packard Enterprise), the number two server company in the world. NB: HPE do not manufacture any servers. Like Dell, they white-label their products, sourced from Wiwynn, Foxconn, Quanta etc.

For 10 years HPE has sat still, yet look at SMCI. Once a mere minnow, they will surpass HPE this year and have Dell in the crosshairs.

The main difference between SMCI and the 'competition' is that SMCI builds custom solutions, whereas HPE/Dell sell reference designs (a generic box).

You walk into a bar and ask for a Dell/HPE: the bartender hands you a vanilla martini.

You walk into a bar and ask for a Supermicro and out comes the 'Savoy Cocktail Book'. The bartender adds in a few extra GPUs, a splash of DLC and a twist of HBM, served in a titanium chassis.

There is no money in reference designs because they are generic, a commodity. Hyperscalers use these to run the internet and other general workloads.

If companies like xAI/Tesla/Anthropic/AWS/Meta/Google/MSFT want to run LLMs or systems designed for specific tasks, such as drug discovery, science or robotics, they will need a custom solution, not a 'Big Iron' quarter horse.

-

A positive update from SMCI CEO Charles Liang at HumanX: https://youtu.be/csPUkLm2xY8?si=5uOuS_g7tWIis8Mf

-

Thanks Bogie. I watched the interview yesterday. There is no question, imo, that SMCI's existing capacity of 2k racks/month (3k this year), currently utilised at circa 500/month (25%), will not be enough within 12 months.
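To put rough numbers on that, here is a quick back-of-envelope sketch using only the figures quoted in the post (500 racks/month shipped against 2k capacity now and 3k later this year). The function names are made up for illustration, and the steady compound growth is purely an assumption, not anything the company has guided.

```python
def utilisation(used_per_month, capacity_per_month):
    """Share of monthly rack capacity currently in use."""
    return used_per_month / capacity_per_month


def required_monthly_growth(used_per_month, capacity_per_month, months):
    """Compound monthly growth rate at which shipments reach capacity in `months`.

    Assumes smooth compounding, which is a simplification.
    """
    return (capacity_per_month / used_per_month) ** (1 / months) - 1


current = 500  # racks/month, figure quoted in the post
for capacity in (2000, 3000):  # racks/month: existing and planned capacity from the post
    u = utilisation(current, capacity)
    g = required_monthly_growth(current, capacity, 12)
    print(f"capacity {capacity}/month: utilisation {u:.0%}, "
          f"growth needed to fill it in 12 months: {g:.1%} per month")
```

In other words, "not enough within 12 months" implies shipments compounding at a double-digit percentage every month, which is the scale of ramp being argued for here.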

-

The takeaway from the interview is simply 'growth'. Other companies are laying off staff; SM is hiring. They are expanding their San Jose campus materially and adding two new campuses, in the Midwest and on the East Coast, plus scaling up Malaysia.

We will see the step change in growth start to materialise by the Q1 (July-Sep) quarter at the latest.

-

SMCI is growing rapidly, as per the revenue figures earlier in this thread. I was just reading an article on AI and Nvidia's potential over the next few years here: https://www.nextplatform.com/2025/03/14/ai-infrastructure-spending-is-the-boom-chemical-or-nuclear/

So long as the demand for Nvidia compute (and networking) grows, SMCI, as Nvidia's lead manufacturing partner, is perfectly positioned to grow with it.

-

Hi Bogie,

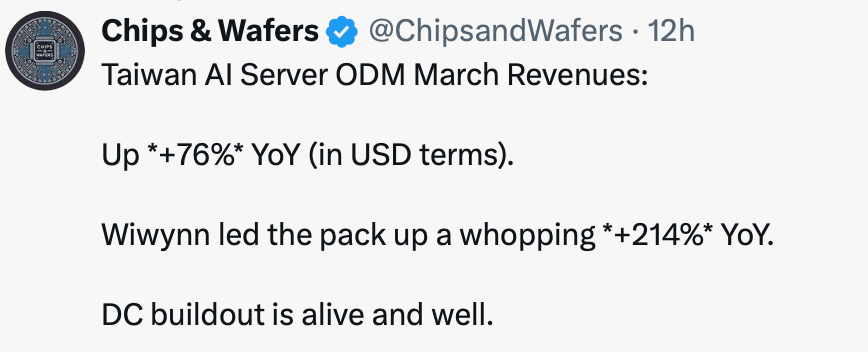

I now take the view that demand is a given. Posting news of all the new data centres being built seems somewhat redundant. It's all about supply, so my focus is on CoWoS-L expansion.

The fact is, AI investment is locked in for the next several years and nothing can derail that, not even the naysayers asking 'but what if it hits a limit (in efficiency)?'. The 'what if' is simply used to create doubt and is the latest suggestion. Before that, Cathie Wood suggested 'it's all pull-forward and over-ordering' and 'it's a blip in demand and will revert to past revenue generation': wrong and wrong. 'AMD is coming': wrong. Even Intel said they would 'destroy Nvidia'. Sure they did.

Anyway, back on track.

SMCI needs chips; that is all they need. They have the customers: more customers and more demand than they can fill. If they had the chips today they would not be reporting $5B/Q (a reminder: this is up from $1.5B per quarter only two years ago or less). They could report $15B/Q.

We have Dell sounding desperate, claiming they 'won' all the xAI business. Completely false. They then report much lower margins, because they don't manufacture their own servers. Asked last week, 'Are you working on Colossus-2, because Dell say it's theirs?', Charles Liang responded: 'Well, we are building a new campus nearby to support it. We believe we will be working on xAI expansion.'

-

Keep an eye on CoWoS-L expansion. It is pivotal. SM revenue is tied to Nvidia revenue, which is tied to CoWoS. Yes, ongoing innovation in robots, ADAS and medicine is all very interesting, but over the next 18 months the story is 100% CoWoS. Nvidia is a decade ahead of everyone else because they have the best solution. It's not just a chip; it's an entire system.

The moment Blackwell/Blackwell Ultra ramps up to meaningful supply, which I believe will be next quarter (250k in Q4, 750k in Q1, 1 million? in Q2, May through July), SM will receive meaningful supply and its revenue will move up materially to the $8B range and continue on a +$2B quarterly cadence from there. Likewise, I expect Nvidia revenue 'this Q' to be circa $50B and to increase by around +$10B every quarter this year, compared with +$4B last year. They cannot do any more because they are constrained. They are constrained because the equipment needed to make more takes 12-18 months to build.
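For anyone who wants to see what those cadences look like laid out, here is a small sketch. It simply extends the starting points and quarterly steps stated above ($8B then +$2B per quarter for SM, circa $50B then +$10B per quarter for Nvidia). The four-quarter horizon and the helper name `linear_ramp` are my own assumptions for illustration; these are expectations from the post, not reported results or guidance.

```python
def linear_ramp(start_bn, step_bn, quarters):
    """Revenue path in $B that starts at `start_bn` and adds `step_bn` each quarter."""
    return [start_bn + step_bn * q for q in range(quarters)]


QUARTERS = 4  # illustrative horizon only, not from the post

scenarios = {
    "SM (post's expectation)": linear_ramp(8, 2, QUARTERS),
    "Nvidia (post's expectation)": linear_ramp(50, 10, QUARTERS),
}

for name, path in scenarios.items():
    formatted = ", ".join(f"${value}B" for value in path)
    print(f"{name}: {formatted} | total over {QUARTERS} quarters: ${sum(path)}B")
```

Changing the start, step or horizon shows how sensitive the annual total is to the cadence, which is the whole point of watching supply.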

-

Eviden, a division of the Atos Group, has partnered with Super Micro to distribute SMCI’s AI SuperCluster solutions, powered by NVIDIA’s GB200 NVL72 technology, across Europe, India, the Middle East, and South America. This collaboration leverages Eviden’s core business in digital transformation—spanning advanced computing, digital security, AI, and cloud services—and its 57,000 employees based in Bezons, France, with operations in over 53 countries.

Eviden holds a leading global position in advanced computing, notably as the provider of Europe’s first exascale supercomputer, JUPITER.

Eviden is the market leader in its field, with group global revenue of €8B.

-

Musk’s xAI Bolsters $100 Billion AIP Initiative, Super Micro Computer Likely Involved

20 March 2025 – In a bold move to cement its dominance in the artificial intelligence (AI) race, Elon Musk’s xAI has joined the AI Infrastructure Partnership (AIP), a consortium initially launched with a $30 billion commitment that has now escalated to a staggering $100 billion with debt financing included. The partnership, originally formed by BlackRock, Global Infrastructure Partners (GIP), Microsoft, and MGX in September 2024 under the name Global AI Infrastructure Investment Partnership (GAIIP), welcomed NVIDIA and xAI as key players this month, further solidifying its technological clout.

The AIP aims to deploy this colossal investment immediately into AI infrastructure, focusing primarily on state-of-the-art data centres and innovative energy solutions to power them. With a spotlight on the United States and its OECD partner nations, the initiative is set to drive economic growth and technological breakthroughs, positioning the US as a global leader in AI innovation. Partners like GE Vernova and NextEra Energy are also collaborating to enhance supply chain efficiency and deliver high-efficiency energy solutions, amplifying the project’s scope.

Speculation is rife that Super Micro Computer Inc. (SMCI) is likely involved in this ambitious project. Although not officially named as a core AIP member, SMCI’s longstanding partnership with NVIDIA, one of AIP’s newest additions, makes its participation highly plausible. Known for supplying cutting-edge servers crucial for AI data centres, SMCI has already played a pivotal role in Musk’s xAI projects, including the development of advanced AI systems. Analysts suggest that SMCI could serve as a key supplier, capitalising on AIP’s “open architecture” approach to bolster the infrastructure buildout.

Musk, never one to shy away from bold statements, has framed this move as a counter to rival initiatives like Project Stargate, a £385 billion ($500 billion USD) data centre venture. The AIP’s rapid mobilisation of funds and partners underscores the escalating global competition for AI supremacy, with the US aiming to outpace state-driven efforts in places like China.

As the AI Infrastructure Partnership accelerates its plans, all eyes are on how this $100 billion injection will reshape the technological landscape. For now, the synergy of Musk’s xAI, NVIDIA’s expertise, and SMCI’s hardware prowess hints at a formidable alliance in the making.

-

After being very quiet about their AI plans, Apple has finally started moving. It has been reported that Apple has ordered 250 GB300 NVL72 racks (the flagship Blackwell Ultra racks) at $4M each. Suppliers are jointly Dell and Supermicro. Nice!
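For scale, the reported order works out as below. This is a minimal sketch using only the two reported figures (250 racks at $4M each). The split between Dell and Supermicro has not been reported, so the 50/50 share in the example is purely a hypothetical placeholder.

```python
def order_value(racks, price_per_rack_usd):
    """Total order value in USD."""
    return racks * price_per_rack_usd


def supplier_share(total_usd, share):
    """Value going to one supplier for a given share (a fraction between 0 and 1)."""
    return total_usd * share


total = order_value(250, 4_000_000)  # reported figures: 250 racks at $4M each
print(f"Total order: ${total / 1e9:.1f}B")

# Hypothetical 50/50 split; the actual allocation between suppliers is unknown.
print(f"Supermicro at an assumed 50% share: ${supplier_share(total, 0.5) / 1e9:.1f}B")
```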

-

Nice win for SMCI. Their designs are better than the rest. Fact. The MLPerf benchmark is the AI gold standard. Topping the league is a big draw and it shows real engineering leadership and innovation. So much for all the armchair experts who say it’s all the same generic hardware. Oh really?

Super Micro Computer Inc. (SMCI) has leveraged its custom-designed 250kW cold plates as a cornerstone of their record-breaking performance in the MLPerf Inference v5.0 benchmarks, announced on 3 April 2025. These cold plates are integral to the liquid-cooled 4U NVIDIA HGX B200 8-GPU system, enabling it to handle the intense thermal demands of eight NVIDIA B200 GPUs, each with a thermal design power (TDP) of up to 1200W when liquid-cooled.

The 250kW rating refers to the cooling capacity of the in-rack coolant distribution unit (CDU) that works in tandem with these cold plates, more than doubling the cooling capability of previous generations while maintaining the same compact 4U form factor. This allows SMCI to dissipate heat efficiently, ensuring the GPUs operate at peak performance without thermal throttling, a key factor in their topping the MLPerf scoreboard.

The design of these 250kW cold plates is a bespoke innovation by SMCI, featuring advanced tubing and a layout optimised for heat transfer. Unlike traditional air-cooling, which struggles with such high-power densities, these liquid-cooling plates directly contact the GPUs, absorbing and transferring heat to the coolant, which is then circulated out via vertical coolant distribution manifolds (CDMs). This setup not only boosts performance—delivering over three times the tokens per second for models like Llama3.1-405B compared to the H200—but also enhances energy efficiency, a critical edge in modern AI data centres. The packaging of trays within the system complements this, allowing dense GPU integration and easy servicing, further amplifying SMCI’s ability to outpace competitors in this benchmark round.

As for where the servers came from: in this MLPerf round, 15 partners submitted stellar results on the NVIDIA platform, including ASUS, Cisco, CoreWeave, Dell Technologies, Fujitsu, Giga Computing, Google Cloud, Hewlett Packard Enterprise, Lambda, Lenovo, Oracle Cloud Infrastructure, Quanta Cloud Technology, Supermicro, Sustainable Metal Cloud and VMware.

Note that Foxconn and Quanta white-label servers for Dell/HPE/Fujitsu/Cisco/Lenovo and others. The tests also used Google TPU and AMD products.

-

Bodes well. We will find out in a week or so.

-

We should hear from management, perhaps tonight after hours, re an earnings date. I'm not expecting an early announcement (prelim business update) because the guide was very wide, $5B-$6B, and I would only expect an update IF they are outside this range.

The CEO made some interesting comments yesterday: 'Today, we are preparing for next generation DLC-2 liquid cooling solution roll-out at customer sites and aiming to lead the industry to achieve over 30% DLC adoption in 2025.'

Now, we could think it's just hot air. However, previously the comment was 'up to 30%', and in light of the recent issues anything he says is subject to a high level of scrutiny and review by the Board. He has said repeatedly of late, 'I have to be careful what I say'.

I believe they have a unique solution. It is the most efficient system on the market and Blackwell supply is much higher now. I am eagerly awaiting a record guide for Q4. We want to see a guide of at least $1B more than any previous one, and ideally $1.5B, which is what I would expect them to actually achieve. We are now in the zone to see quarter-on-quarter incremental growth of $1.5B.

-

DLC-2 likely includes newly developed cold plates and a 250kW coolant distribution unit (CDU), enabling it to handle the thermal demands of NVIDIA’s Blackwell GPUs, which consume significantly more power (and generate a lot more heat) than prior generations.

DLC-2 by SM was the number 1 rack as tested in MLPerf, the benchmark run by the MLCommons consortium of AI leaders who test equipment.

We believe Meta is a 'whale' customer of SMCI and will be taking delivery of this rack in the coming weeks/months.

-

SMCI released a business update yesterday.

“During Q3 some delayed customer platform decisions moved sales into Q4,” the company said. Super Micro's GAAP and adjusted gross margins were 220 basis points lower than in the second quarter, largely due to “higher inventory reserves resulting from older generation products and expedite costs to enable time-to-market for new products.”

Preliminary adjusted earnings per share for the period will be between $0.29 and $0.31 per share, below the $0.53 per share estimate. Net sales are expected to be between $4.5B and $4.6B, below the $5.35B estimate.
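To size the miss, here is a quick sketch that compares the midpoint of each preliminary range with the estimate quoted above. Using the midpoint is my own simplification and the function name is made up for illustration; the inputs are just the figures from the update.

```python
def shortfall_vs_estimate(range_low, range_high, estimate):
    """Fractional gap between the midpoint of a preliminary range and the estimate.

    A negative result means the midpoint is below the estimate.
    """
    midpoint = (range_low + range_high) / 2
    return (midpoint - estimate) / estimate


# Figures quoted in the business update: preliminary range vs estimate.
eps_gap = shortfall_vs_estimate(0.29, 0.31, 0.53)    # adjusted EPS, USD
sales_gap = shortfall_vs_estimate(4.5, 4.6, 5.35)    # net sales, $B

print(f"Adjusted EPS midpoint vs estimate: {eps_gap:.1%}")
print(f"Net sales midpoint vs estimate:    {sales_gap:.1%}")
```

The EPS gap comes out much larger in percentage terms than the sales gap, which fits with the margin hit described in the update.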

Super Micro will host a conference call on May 6 to discuss the results and the Q4 guide.

My notes explaining the shift are below. I'm keeping an open mind and will digest what they report next week. The initial Blackwell delay (end of 2024) set up a whipsaw effect when Nvidia subsequently moved quickly to the next generation of chip to catch up on a performance basis (B200 to B300), leaving a demand vacuum for lower-powered chips.

GB200 Delays: Initial Blackwell delays forced customers to wait or rely on Hopper, leading to a buildup of GB200 orders in SMCI’s backlog.

GB300 Announcement: The early GB300 reveal, with superior performance, prompted some customers to delay GB200 orders, anticipating GB300 availability in late 2025. This created a “demand vacuum” where customers deferred purchases, expecting better technology soon.

Hopper-to-Blackwell Transition: As customers shifted from Hopper to Blackwell, SMCI faced inventory write-downs for older H100/H200 stock, as demand for these products waned. Expedite costs to rush B200/GB200 production further eroded margins.

Customer Behaviour: Tier 2 cloud service providers (CSPs) and enterprises, in particular, scaled back Hopper purchases in late 2024 and delayed GB200 orders, awaiting GB300 allocations in mid-2025.

NVIDIA’s Roadmap Disruption: The rapid shift from GB200 to GB300, coupled with Rubin’s early timeline, created a demand vacuum that disrupted SMCI’s order flow. Customers delaying GB200 purchases for GB300

Supply Chain Challenges: Blackwell delays and the need to redesign for GB300’s 1,400W TDP and liquid cooling requirements strained SMCI’s operations, leading to expedite costs and inventory issues.

Customer Delays: SMCI noted that some customers needed more time to complete DLC data centre buildouts, which delayed order fulfillment. This is consistent with the high power and cooling demands of Blackwell systems.

Alignment with Blackwell (GB200/GB300) Demand: NVIDIA’s Blackwell GPUs, particularly the GB200 (available now) and GB300 (slated for 2H 2025), have high thermal design power (TDP) requirements, with the B300 chip at 1,400W. These GPUs necessitate advanced cooling solutions like DLC to manage heat efficiently in dense AI server racks.

SMCI’s leadership in DLC, evidenced by deployments like xAI’s Colossus (100,000+ GPUs), positions it to capture significant market share as hyperscalers and cloud service providers (CSPs) ramp up Blackwell-based data centres in 2H.

Liang’s >30% DLC projection aligns with the expected surge in GB200/GB300 deployments, as customers who delayed orders in 1H 2025 (awaiting GB300 or completing data centre buildouts) are likely to accelerate purchases in 2H CY2025.

NVIDIA’s Rubin architecture, reportedly ahead of schedule for 2H 2026, will likely push TDPs (thermal design power) even higher, requiring advanced DLC solutions. SMCI’s >30% DLC target in 2025 sets the stage for sustained leadership as Rubin ramps in 2026–

-

Based on this info, should we see a good next quarter?

-

Yes, we should. We are def looking for a QoQ restoration of the story.

-

This is DLC-2: the ability to deliver racks > 250kW. We believe the primary customers for this are Meta and xAI.