Micron Technology

-

Micron appoints Mark Liu to its board. Mark spent 30 years at TSMC, where he was Chairman and Co-CEO. He is a titan of the industry and this is a big deal for Micron. He was instrumental in TSMC becoming the world's best foundry. He resigned in 2024 and handed the reins over to C.C. Wei (at TSMC). He will bring deep expertise to the company. Micron is up 8% today.

-

Micron’s 50% DRAM Outsourcing Boost Signals Major HBM Push

Micron Technology has ramped up its outsourcing of DRAM chip packaging and testing by 50% with Taiwan-based partner Powertech Technology, a move that underscores its aggressive pivot towards High Bandwidth Memory (HBM) production. This strategic shift, reported in early 2025, allows Micron to offload a significant portion of its DRAM workload, freeing up its own manufacturing lines to focus on the burgeoning HBM market—projected to soar from £3.1 billion in 2023 to over £19.5 billion by 2025.

The implications are clear: by contracting out this additional DRAM capacity, Micron is accelerating its HBM expansion at a critical time. HBM, prized for its high-speed performance in AI and high-performance computing applications, is in skyrocketing demand, and Micron aims to increase its market share from 10% to 20-25% by mid-2025. This outsourcing decision not only optimises its production capabilities but also signals strong confidence in HBM’s profitability, especially as the company’s latest quarterly net sales hit £6.8 billion, with HBM revenue doubling.

Industry analysts see this as a savvy play. With a new £5.5 billion HBM-focused facility in Singapore set to launch in 2026, and plans for HBM4 and HBM4E rollouts in 2026 and 2027/2028 respectively, Micron is positioning itself to challenge South Korean leaders SK Hynix and Samsung. The freed-up capacity could help Micron meet commitments like supplying HBM3E 8H memory for Nvidia’s Blackwell architecture, showcased at CES 2025 with a blistering 1.8 terabytes per second bandwidth. Moreover, with £4.8 billion in US CHIPS Act funding secured in December 2024, Micron’s domestic and global manufacturing footprint is expanding rapidly.

This 50% DRAM outsourcing hike is more than a logistical tweak—it’s a bold statement of intent. By prioritising HBM over traditional DRAM in its own facilities, Micron is betting big on the AI-driven future, potentially reshaping the competitive landscape of the memory industry.

Source: TechPulse -

Last night Micron (MU) reported their fiscal Q2/2025 earnings.

Revenue $8.05B, +38.3% Y/Y! (beat by $150M), and EPS $1.56 (beat by 14c).

CEO Sanjay Mehrotra (a brilliant engineer) said 'Micron delivered above guidance and data centre revenue is up 300% from 1 year ago. We are extending our technological lead with our 1-gamma DRAM nodes. We expect record quarterly revenue in Q3.'

The outlook is a revenue guide of $8.8B.

Comments from the conference call:

HBM revenue grew +50% sequentially to over $1B this quarter. HBM shipments were ahead of our plans and we are the only company globally that has shipped low-power DRAM into the data centre in high volumes. Momentum is building. We expect Q3 to be another record.

Our 1-beta DRAM leads the industry and we are extending that leadership further with the launch of our 1-gamma node.

In January, we broke ground on an HBM advanced packaging facility in Singapore and we are aiming for production at scale in 2027.

As GPU and AI accelerator performance continues to increase, these high-performance processors are starved of memory bandwidth. HBM provides the bandwidth needed and we are very excited about the growth opportunities. It is a highly complex and highly valuable product category where our customers recognise Micron as the HBM technology leader.

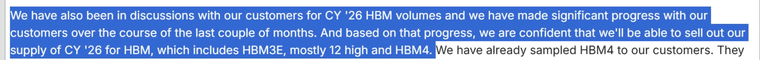

All 2025 HBM production has been pre-sold and we are already signing agreements for our planned 2026 supply.

Most of our H2 2025 (second half) shipments will comprise our new '12-high' HBM3E (Blackwell Ultra!). Looking ahead, we are enthusiastic about HBM4 for calendar 2026, which is aligned to our customer requirements (Rubin).

Our 9550 NAND SSD is approved for the GB200 NVL72.

We see promise in the automotive sector: memory and storage content in cars continues to increase as AI-enabled in-car infotainment systems become richer. Advanced robotaxi platforms today contain over 200 GB of DRAM.

-

MU asserting its leadership. Interesting that Samsung, a formidable force in technology, hasn't even had its offerings validated. Left in the dust.

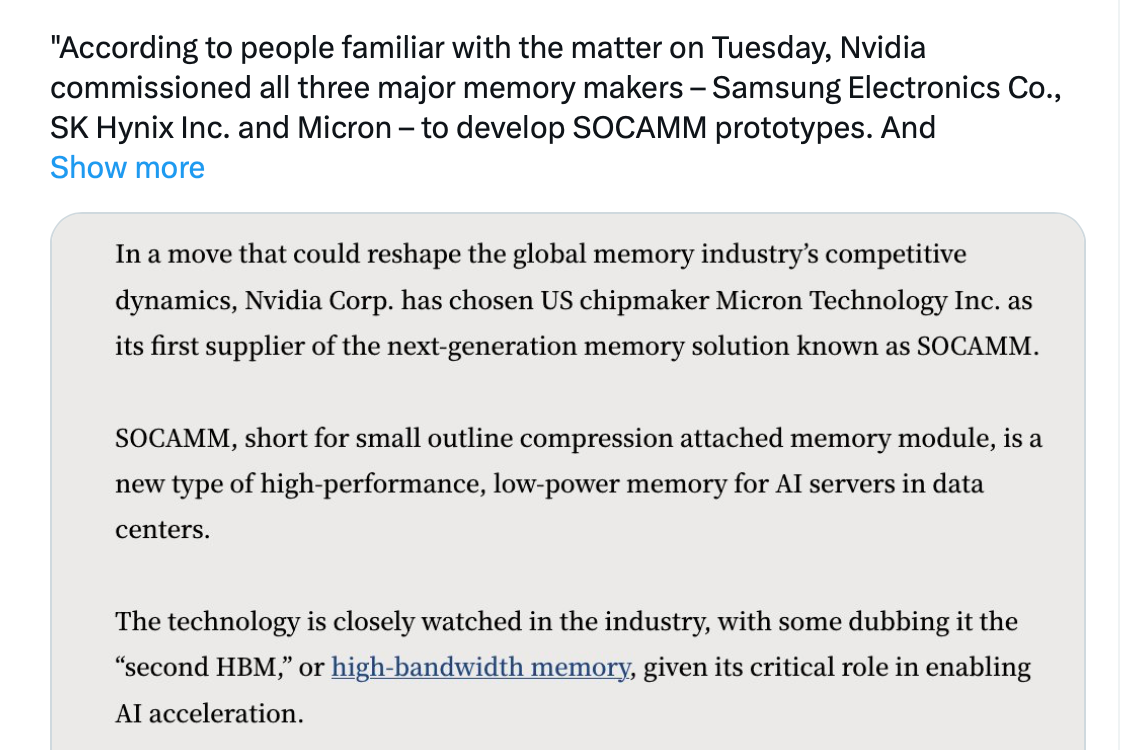

Amid the intensifying HBM race, Micron has secured a spot with its HBM3E 12H designed into NVIDIA’s GB300. Notably, according to its press release, the U.S. memory giant is also the only company shipping both HBM3E and SOCAMM memory for AI servers, reinforcing its leadership in low-power DDR for data centres.

According to the Korean Herald, Micron has surprised the industry by announcing the mass production of SOCAMM, dubbed the “second HBM”, ahead of SK hynix.

Baird hiked its price target from $130 to $163, signaling growing conviction that Micron's high-bandwidth memory (HBM) chips are about to play a much bigger role in the AI boom. That sentiment is spreading fast. Rosenblatt now sees the stock hitting $200, and Wedbush, UBS, and others are sticking with bullish calls. Why? Simple: Micron isn't just riding the AI wave, it's building the surfboard. HBM sales topped $1 billion last quarter, beating internal forecasts and jumping 50% sequentially. More importantly, supply is sold out for the year, and the TAM forecast for 2025 just surged from $20B to $35B.

Internally, Micron says that today it has 9% of the $20B HBM market (Dec 24), that it already appears to be on an annual (TTM) run-rate of $4B, and that it anticipates its market share reaching 25% of a $100B market. This suggests Micron could grow HBM sales from zero 12 months ago to $25B annually by 2030, effectively doubling its total revenue.

This is a classic: look at the game being played out, not the scoreboard!
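The arithmetic behind that projection can be sketched in a few lines. The market sizes and shares are the ones quoted in this post; the function name is purely illustrative.

```python
def hbm_revenue(market_size_bn: float, share: float) -> float:
    """Revenue in $bn implied by a market size ($bn) and a market share (0 to 1)."""
    return market_size_bn * share

today = hbm_revenue(20, 0.09)     # 9% of a $20B market (figures from the post)
target = hbm_revenue(100, 0.25)   # 25% of a $100B market (figures from the post)

print(f"Implied HBM revenue today:     ${today:.1f}B")
print(f"Implied HBM revenue at target: ${target:.1f}B")
print(f"Multiple of today's figure:    {target / today:.1f}x")
```

Note that 9% of $20B works out to $1.8B, which is below the roughly $4B annual figure mentioned in the post. Presumably the $4B annualises the most recent $1B+ quarter, but that is an assumption on my part.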

HBM isn’t just important—it’s foundational to AI servers, acting as the high-speed circulatory system for data-intensive AI workloads. For a chip like “Feynman” with “huge amounts” of HBM, it will be the backbone enabling breakthroughs in model size, training speed, and inference efficiency. As AI servers evolve, HBM’s role will shift from critical to utterly indispensable, driving both technical and economic outcomes in the AI race.

This is why we invested in MU: HBM is the iPhone moment.

-

Micron is making great progress with its HBM3E memory, a super-fast type of DRAM used in AI and high-performance computing.

They’re producing two versions: a 12-layer (12-Hi) version that holds more data (36GB) and an 8-layer (8-Hi) version (24GB). Micron says its yields on the 12-Hi version are improving faster than they did on the 8-Hi, which means fewer defects and more reliable production.

Originally, they planned for the 12-Hi to become their main product later in 2025, but now they expect it to take over by the third quarter of 2025 (July-September). This is a big deal because customers, like companies building AI systems, love the 12-Hi for its speed (over 1.2 terabytes per second) and 20% lower power use compared to competitors.

Micron’s also doing better than expected this quarter because memory prices are strong and the market for another type of memory, NAND, is stable. Looking ahead to 2026, they’re talking with customers about making even more 12-Hi memory and getting ready for HBM4, the next generation that’s faster and holds more data.

This puts Micron in a strong spot to compete with rivals like SK hynix and Samsung.

-

Samsung has announced delays in the qualification of its HBM3E memory, pushing back production until at least Q4. An excellent update for Micron, which is already in mass production.

-

Micron Technology announced it has begun shipping HBM4 36GB 12-high memory samples to key customers, with NVIDIA, the leading AI chipmaker, likely at the forefront. This is a significant milestone for NVIDIA’s Rubin GPU, set to launch in early 2026 (I think sooner, unofficially), which relies on HBM4’s massive bandwidth to power next-generation AI workloads. Each Rubin GPU package will pack 288 GB of HBM4 across 8 stacks, delivering a blistering 13 TB/s of memory bandwidth to handle large language models and complex reasoning tasks.

Compared to Micron’s HBM3E, HBM4 is a leap forward, offering over 60% better performance and 20% improved power efficiency. Some perspective: a full rack will contain > 20TB of HBM4 and can handle 900TB/sec of bandwidth. I can't quite get my head around that number. This early sampling signals Micron’s readiness to support NVIDIA’s aggressive Rubin timeline, reduces supply chain risk and will be a strong growth vector for Micron.
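For anyone wanting to sanity-check the rack numbers, here is a rough sketch. The per-package figures are the ones quoted above; the 72 packages per rack is my assumption (an NVL72-style rack) and is not stated in the announcement.

```python
GPUS_PER_RACK = 72      # assumption: NVL72-style rack, not stated in the post
HBM_PER_GPU_GB = 288    # from the post: 288 GB of HBM4 per Rubin package
BW_PER_GPU_TBS = 13     # from the post: 13 TB/s of bandwidth per package

# Decimal units: 1 TB = 1000 GB
rack_capacity_tb = GPUS_PER_RACK * HBM_PER_GPU_GB / 1000
rack_bandwidth_tbs = GPUS_PER_RACK * BW_PER_GPU_TBS

print(f"HBM4 per rack:      {rack_capacity_tb:.1f} TB")
print(f"Bandwidth per rack: {rack_bandwidth_tbs} TB/s")
```

Under that assumption the totals land at about 20.7 TB and 936 TB/s, which is consistent with the "> 20TB" and "900TB/sec" figures.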

-

Micron is on a roll.......

To think the recent correction was gifting MU stock at $61 (April 4). It's a great case study in the tried and tested strategy of buying quality based on evidence (which was staring us in the face) and holding long term.

-

Micron report on the 25th

Wall Street expects them to post a quarterly EPS of $1.61 on revenue of $8.85B.

I think they will almost certainly beat this, with revenue circa $9.1B and EPS up to $1.75, imo.

-

Write-up tomorrow. Revenue $9.3B and EPS $1.91. The guide was high in the extreme: EPS $2.35-2.60 and revenue $11B. This is quarter to quarter, remember. Brilliant result, well ahead of the highest expectations. Bodes very well for the related sectors.

They blew it away and guided ‘off the charts’

-

Here are Micron's results plus highlights from the post-earnings conference call. Top-line growth, and EPS growth driven by margin expansion. Could not have asked for a better report card!

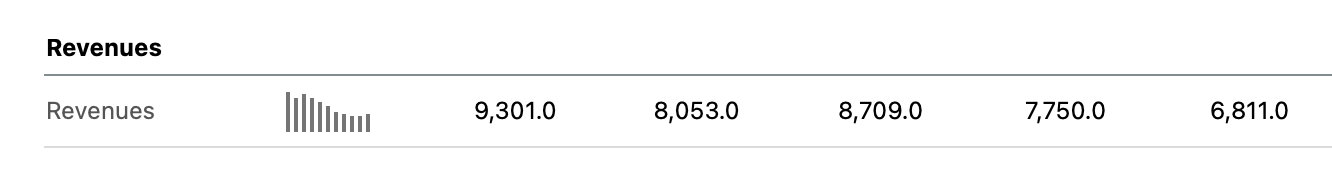

Record Q3 revenue of USD 9.3 billion, up 37% YoY and 15% QoQ, driven by robust demand for DRAM and NAND products.

Earnings per share (EPS) of USD 1.91, surpassing analyst expectations of USD 1.59 (20.13% surprise), up 200% YoY and 22% QoQ.

Gross margin improved to 39%, up 110 basis points QoQ, reflecting optimised pricing and cost management.

Free cash flow reached over USD 1.9 billion, the highest in six years, indicating robust financial health.

Data centre revenue more than doubled YoY, reaching a record level, fuelled by AI-driven demand.

High-bandwidth memory (HBM) revenue grew nearly 50% QoQ, exceeding USD 1 billion, with HBM sold out for calendar 2025.

Technology and Product Leadership:

Record DRAM revenue with the 1-gamma DRAM node (using EUV) offering 20% lower power, 15% better performance, and over 30% improvement in bit density compared to 1-beta DRAM.

Gen9 NAND node is the industry’s fastest TLC-based NAND, with disciplined ramp-up to balance supply and demand.

HBM3E offers 20% lower power consumption than competitors’ 8-high solutions, with 50% higher memory capacity and industry-leading performance. HBM4 is expected to ramp in 2026 with over 60% bandwidth increase.

Leadership in low-power (LP) memory for data centres, reducing memory power consumption by over two-thirds compared to D5, with a transition to SOCAMM form factor planned.

Strategic Positioning and Investments:

Well-positioned for AI-driven demand, with CEO Sanjay Mehrotra emphasising Micron’s role in the “transformative era” of AI.

Significant investments in U.S. manufacturing and R&D, including a new HBM advanced packaging facility in Singapore, to support future growth.

Achieving share gains in high-margin product categories, strengthening customer relationships.

Key Financial Metrics (Q3 2025) with YoY and QoQ Changes, Plus Q4 2025 Guidance

Revenue: USD 9.3 billion

YoY: +37% (from USD 6.8 billion in Q3 2024)

QoQ: +15% (from USD 8.1 billion in Q2 2025)

Earnings Per Share (EPS): USD 1.91

YoY: +200% (from USD 0.64 in Q3 2024)

QoQ: +22% (from USD 1.57 in Q2 2025)

Gross Margin: 39%

YoY: Not explicitly stated, but significantly improved from negative or low margins in Q3 2024 due to market recovery

QoQ: +110 basis points (from 37.9% in Q2 2025)

Free Cash Flow: USD 1.9 billion

YoY: Highest in six years, specific YoY change not provided

QoQ: Significant increase, specific QoQ change not provided

Q4 2025 Guidance:

Revenue: USD 10.7 billion (±USD 200 million)

Gross Margin: 42% (±50 basis points)

EPS: USD 2.51 (±USD 0.10)
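As a quick check, the growth rates and the earnings surprise can be recomputed from the base figures listed above. This is only a sketch: the inputs are the rounded numbers from this summary, and the helper name is illustrative.

```python
def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100

revenue_yoy = pct_change(9.3, 6.8)     # Q3 2025 vs Q3 2024 revenue, USD bn
revenue_qoq = pct_change(9.3, 8.1)     # Q3 2025 vs Q2 2025 revenue, USD bn
eps_yoy = pct_change(1.91, 0.64)       # Q3 2025 vs Q3 2024 EPS
eps_qoq = pct_change(1.91, 1.57)       # Q3 2025 vs Q2 2025 EPS
eps_surprise = pct_change(1.91, 1.59)  # reported EPS vs the quoted consensus
margin_qoq_bps = (39.0 - 37.9) * 100   # percentage points to basis points

print(f"Revenue YoY:  {revenue_yoy:.1f}%")
print(f"Revenue QoQ:  {revenue_qoq:.1f}%")
print(f"EPS YoY:      {eps_yoy:.1f}%")
print(f"EPS QoQ:      {eps_qoq:.1f}%")
print(f"EPS surprise: {eps_surprise:.2f}%")
print(f"Gross margin QoQ: {margin_qoq_bps:.0f} bps")
```

The results round to the headline figures quoted (37%, 15%, roughly 200%, 22%, 20.13% and 110 bps), so the summary is internally consistent.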

-

Talking heads don't know what they are talking about. :) The stock is at a multi-month high, so the selling is most likely derivatives-related: with calls out of the money, option writers don't need the hedge (the shares), so they sell them. No concerns here. It's a cyclical business, yes, but this cycle is going to last a very long time, so I would call it secular. On top of that, other memory segments, which have been depressed because consumer electronics have been flat, can only get better. The fwd PE is about 11X, which is dirt cheap imo.

-

I see we had another “expert” making noises yesterday that second-half business will be poor for Micron.

-

Every quarter will be better than the last. Micron will double revenue and earnings over the next 4-5 years.

-

Today Micron updated the market on its current quarter's guidance and the stock is up in pre-market trading.

Micron has today revised its projections for revenue, gross margin, operating expenses, and earnings per share (EPS) for the fourth quarter of fiscal 2025, concluding on 28 August 2025. Previously, the Company forecasted revenue of $10.7 billion ± $300 million, non-GAAP gross margins of 42.0% ± 1.0%, and non-GAAP EPS of $2.50 ± $0.15 for the fiscal fourth quarter.

The Company has now updated its outlook for the fourth quarter of fiscal 2025, projecting revenue of $11.2 billion ± $100 million, non-GAAP gross margins of 44.5% ± 0.5%, and non-GAAP EPS of $2.85 ± $0.07.
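To put the size of the revision in perspective, here is a small sketch comparing the old and new guidance ranges. The midpoints and tolerances are the ones quoted above; the `Guide` class and the dictionary keys are illustrative names only.

```python
from dataclasses import dataclass

@dataclass
class Guide:
    mid: float   # midpoint of the guidance
    tol: float   # plus/minus tolerance around the midpoint

    @property
    def low(self) -> float:
        return self.mid - self.tol

    @property
    def high(self) -> float:
        return self.mid + self.tol

# Figures as quoted in the update (revenue in $bn, margin in %, EPS in $)
old = {"revenue": Guide(10.7, 0.3), "gross_margin": Guide(42.0, 1.0), "eps": Guide(2.50, 0.15)}
new = {"revenue": Guide(11.2, 0.1), "gross_margin": Guide(44.5, 0.5), "eps": Guide(2.85, 0.07)}

for name in old:
    clears_old_range = new[name].low > old[name].high
    print(f"{name}: midpoint {old[name].mid} -> {new[name].mid}, "
          f"new low end above old high end: {clears_old_range}")

revenue_uplift = (new["revenue"].mid / old["revenue"].mid - 1) * 100
eps_uplift = (new["eps"].mid / old["eps"].mid - 1) * 100
margin_uplift_bps = (new["gross_margin"].mid - old["gross_margin"].mid) * 100
print(f"Revenue midpoint +{revenue_uplift:.1f}%, EPS midpoint +{eps_uplift:.1f}%, "
      f"gross margin +{margin_uplift_bps:.0f} bps")
```

On all three metrics the bottom of the new range sits above the top of the old one, and the EPS midpoint rises by about 14% against roughly 4.7% for revenue, which fits the margin expansion story.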

This revised forecast reflects improved pricing, particularly for DRAM, and robust operational performance. Basically, HBM sales are surging. And I think they are still being conservative. $3 EPS? Maybe.

Curiously, it popped on Friday, so someone knew this was coming. If today's pre-market pop holds, this would amount to a 15% gain in the last week.

The market has been consistently wrong about MU, thinking Samsung would dominate. However, Samsung has struggled to obtain certification from Nvidia due to heat management, and by following that issue through the news over time one could deduce that other players would gain share. That's exactly what happened.

Historical quarterly revenue: now > $11B, and the PE is actually about 10. Looks good value, particularly given the growth rates.

-

Great news... I wonder what that expert will be writing about this week after his views from a few weeks ago...

-

Further, yesterday Micron's Chief Business Officer said the following. Basically they are 'sold out' until December 2026.

What companies like Micron do is put the bulk of the product under contract. Micron will reserve some inventory to sell on the spot market at potentially much higher prices, and of course it will likely increase yield through efficiency.

HBM4 is a key component in the Rubin architecture (Blackwell-Next).