Nvidia News

-

As you can see, with a best-case scenario of 7M chips available in 2025 and 10M in 2026, Nvidia will be materially supply-constrained for 'years'. NB: this is GPU demand, not compute demand; TPUs/ASICs are additional. AMD and Intel have about 6% of the market. This won't change, simply because CoWoS capacity is the bottleneck and Nvidia owns the supply (70%). Second, AMD chips can't be scaled (as discussed), so anyone suggesting they are a threat simply doesn't understand why Nvidia is so dominant. TPUs/ASICs are very good at one task (as are AMD/Intel chips). Nvidia systems are very good at all tasks because they are programmable. It's that simple. Nvidia is, and this is not just my opinion, it's industry opinion, 5-10 years ahead of AMD. That may as well be 100 years in this game.

-

It's also a reasonable assumption that the above numbers will get bigger because Stargate won't be allowed to go unchallenged.

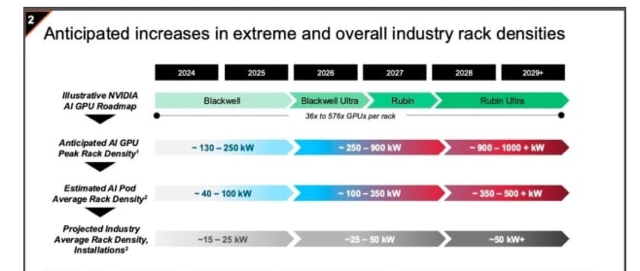

Knowing the above, how does the industry catch up to demand, and how are all these racks going to be deployed? Firstly, through chip architecture evolution: Rubin and Rubin-next (internally called 'X').

Now, take a moment to look at this chart. Today, the biggest racks hold 72 GPUs + 36 CPUs and draw circa 150 kW. Now look at the chip evolution. Blackwell Ultra is arriving in 6 months or sooner, and Rubin 2 quarters after: hundreds of chips per rack and, near term, 250, 300, then 400 kW. What will be needed to make this work? I wonder. DLC (direct liquid cooling).

If we look at the supply of 5M-7M chips near term, that's (divided by 72) 70k-97k racks. The global AI-level DLC capacity today is no more than 50k racks, with more using air-cooled solutions. So when we suggest anyone supplying DLC has a captive market, competition doesn't matter right now. It's a vendor's market. If one company wins a project, it only reduces its ability to meet the needs of the next customer. My point is simple: if you want compute, you will have to engage with SMCI. Demand will be met by a combination of expanding rack-scale manufacturing capacity plus increased rack density. Liang said during the last update that they are working on a 250 kW solution for a 'special customer'. It's either xAI or Meta. Follow the crumbs. Ignore the ill-informed, who, by the way, will never provide any evidence-based support but rather continue to repeat statements already debunked.
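A quick sketch of the rack arithmetic, assuming every rack is a 72-GPU (NVL72-style) configuration and taking the 50k-rack DLC figure above at face value. The constants and function names are illustrative, not from any vendor tool.

```python
import math

GPUS_PER_RACK = 72          # assumption: every rack is a 72-GPU (NVL72-style) config
DLC_RACK_CAPACITY = 50_000  # the post's ceiling for today's global AI/DLC rack capacity

def racks_needed(chips: int, per_rack: int = GPUS_PER_RACK) -> int:
    """Whole racks needed to house `chips` GPUs (partial racks round up)."""
    return math.ceil(chips / per_rack)

def dlc_shortfall(chips: int, capacity: int = DLC_RACK_CAPACITY) -> int:
    """Racks that would not fit within the stated DLC capacity (never negative)."""
    return max(0, racks_needed(chips) - capacity)

for chips in (5_000_000, 7_000_000):
    print(f"{chips:,} chips -> {racks_needed(chips):,} racks, "
          f"{dlc_shortfall(chips):,} beyond DLC capacity")
```

Rounding up gives 69,445 and 97,223 racks, i.e. roughly 19k-47k racks more than the stated DLC capacity.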

Right now we sit and wait for silicon supply to ramp, safe in the knowledge it will get there soon enough. So all the nail-biting about quarterly results should be put into perspective. $50B or $60B in one quarter? Sure, I know which is preferred, but it will come regardless, and the lumpy timing is not in any way a detraction, more a distraction. We are not looking at 'lost' revenue or income years apart; it's all near term.

-

Oracle reported Q3 earnings last night. The interesting part is their backlog of service contract obligations: plus $46B this 'year' (Q3 to Q3), for a current backlog of $130B. A staggering number. Oracle has gone all-in on AI and has an advantage over its competitors due to its legacy enterprise ties (databases and ERP). Its networks are faster too.

The company also mentioned that 'component delays' (clearly GPUs), which slowed cloud expansion, should ease in July. This ties in with everything else we have heard, so it's great to get more weight behind the thesis that volume supply will be hitting the decks very soon and demand is extreme. It also shuts the door on the shills talking about monetising AI. I can see 130 billion reasons why that is nonsense. It won't stop them repeating it!

-

Nvidia CEO Jensen Huang was interviewed yesterday. Here is what he said.

Tariffs will not have a meaningful impact in the medium term, and in the long term we will be sourcing most of our components in tariff-free regions.

The market is completely wrong about our growth. Countries are awakening to the need to treat their sovereign data as a national resource. All companies are scrambling for AI factory token generation. Those that don't will become inefficient.

quote:

'All analysts' forecasts have it wrong. Are you guys listening, are you following along? None of the $1T capex spend (annually by 2028, massive in its own right) takes account of the massive AI Factory and Sovereign AI build-out. Do you understand what I am saying? I say that because no one is even close. I see multiple hundred-billion-dollar capex projects that are coming online, and they're not in any data centre forecast, yet. Are you guys paying attention? We are booking these as we speak. Pretty soon they will wake up. There is no doubt in my mind that, out of the $120 trillion global industry market, a very large part, many trillions of dollars, will be in AI Factories. There is no question in my mind. Industry wants to manufacture intelligence, and we build those factories. This segment is completely unaccounted for to date. And it is the largest layer, larger than the total data centre market. The market today is focussed on the large CSPs (AWS/Azure etc.), but I think very soon, starting, err, now, you will start seeing an AI Factory build-out which is completely independent of CSP capex. We are working on some very big AI Factory projects, hundreds of billions. And on competition: if your chips are not better than Hopper, you may as well just give them away (quality dig there). And for us, every GW of DC is $50B revenue. When we are working on 5GW projects, you can do the math, right? So any new DC is a massive investment; you won't be choosing anything but the best systems, because the risks are too high otherwise. We are the best in ALL DC areas, not just the chip.'
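Doing the math Huang invites, as a minimal sketch that simply assumes his quoted rule of thumb (~$50B of revenue per GW of data centre) scales linearly with project size:

```python
REVENUE_PER_GW_USD = 50e9  # Huang's rule of thumb as quoted: ~$50B of revenue per GW of DC

def project_revenue_usd(gigawatts: float) -> float:
    """Implied Nvidia revenue for a data-centre project of the given size (linear assumption)."""
    return gigawatts * REVENUE_PER_GW_USD

print(f"5 GW project -> ${project_revenue_usd(5) / 1e9:,.0f}B")
```

A single 5 GW project implies roughly $250B, which is where the 'hundreds of billions' in the quote comes from.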

Of course, the usual crowd would say, 'CEO pumps his company, shocker', but Huang is ultra-conservative.

I've said it many times before. This company is just getting started.

-

Very interesting as always, Adam.

I did see a couple of reports this morning from so-called market experts saying there was nothing to excite the market from Nvidia... perhaps they were not listening, or thought he was talking crap, as per your last comment.

Nothing to get excited about-

OK. Well, put them on ignore.


-

Nvidia (NASDAQ:NVDA) will open a quantum computing research lab in Boston which is expected to start operations later this year.

The Nvidia Accelerated Quantum Research Center, or NVAQC, will integrate leading quantum hardware with AI supercomputers, enabling what is known as accelerated quantum supercomputing, said the company in a March 18 press release.

Nvidia's CEO Jensen Huang also made this announcement on Thursday at the company's first-ever Quantum Day.

-

SK Hynix has announced early mass production of HBM4. Originally scheduled for 2026, production was first brought forward to December 2025, and the company now states a time frame of 'mid-to-late' 2025 (October). Interesting, given there is only one customer and one chip designed to use it: Nvidia Rubin. Huang stated last week that Rubin would be available 'mid 2026'; however, we did discuss an early launch a couple of weeks ago, and this is strong supporting evidence of 'early Rubin'. There is no need for HBM4 otherwise.

The Rubin architecture is a game changer in terms of power and efficiency and sets the industry on a path to 600 kW server racks, not to mention ASPs for said racks going beyond $10 million each! Remember, 'rack capacity' is key, and DLC is a must-have for GB300 (Blackwell Ultra) and of course Rubin, which in future will be named R100/R200 and VR200. The back end of 2025 is looking interesting.

-

Just a quick one.

News of China going all green and restricting AI chips because they are power-hungry.

News from Alibaba: 'Why is the US spending so much on AI?' Some people fall for it; that's pretty much all I have to say. The biggest polluter on the planet, the biggest builder of coal-fired power plants, has concerns over power use. And Alibaba, which can't access enough US silicon and is far, far behind, says 'it's not needed'.

China doesn't buy a lot of US chips, only a few billion dollars' worth. Every chip not bought by China will be sold to someone else. Banning all chips to China won't have much impact, not now or in the future, because Nvidia can only produce so much and has 10X demand for that limited supply.

-

Fact: the CCP is pushing Huawei's (poor) chips, the Ascend 910C. It is far less efficient than the H20 in terms of operations per watt. So the 'don't buy US chips because they hurt the planet' line is utter nonsense.

-

@Adam-Kay said in Nvidia News:

The biggest polluter on the planet-the biggest builder of Coal fired power plants has concerns over power use.

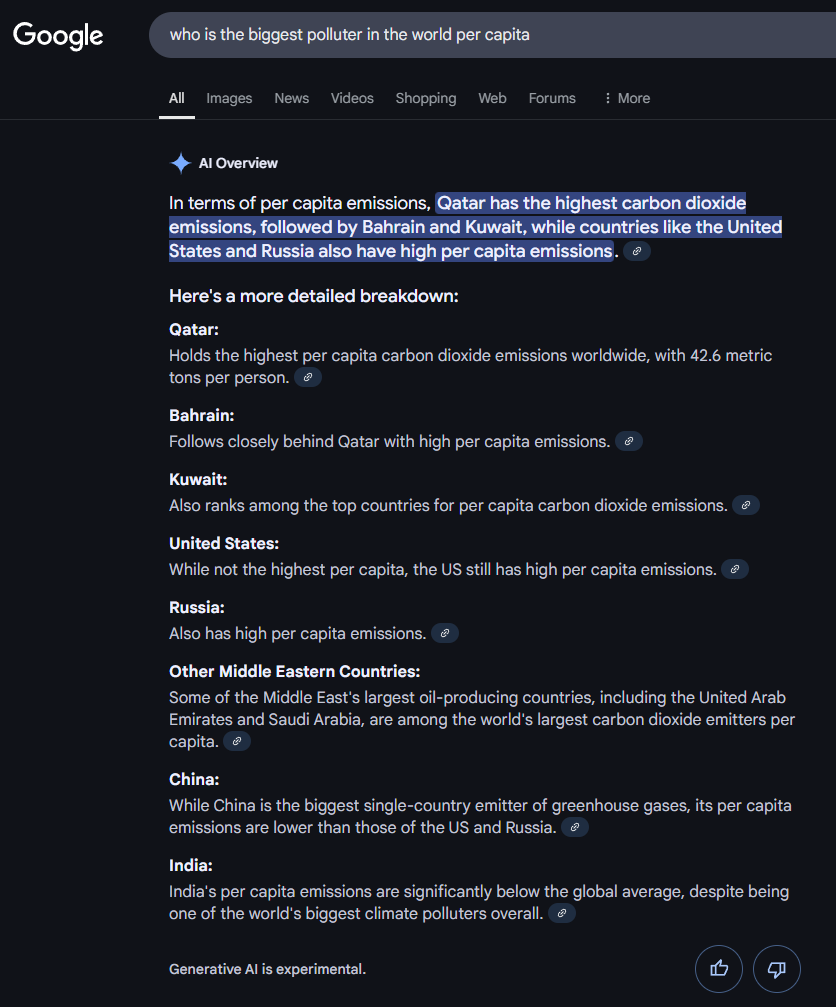

If you take the country as a whole, then China is the biggest polluter, followed by the US, which generates about half of what China does. But that's not really looking at the whole picture, is it?

If you use pollution level per capita, which is a far better way to look at it, then the whole picture changes drastically.

China population: 1.411 billion

US population: 340.1 million

AI tells me the biggest polluter per capita is Qatar, with China some way down that list. Per capita, the US is a bigger polluter than China, and likely to get bigger given Trump's 'drill, baby, drill' stance.

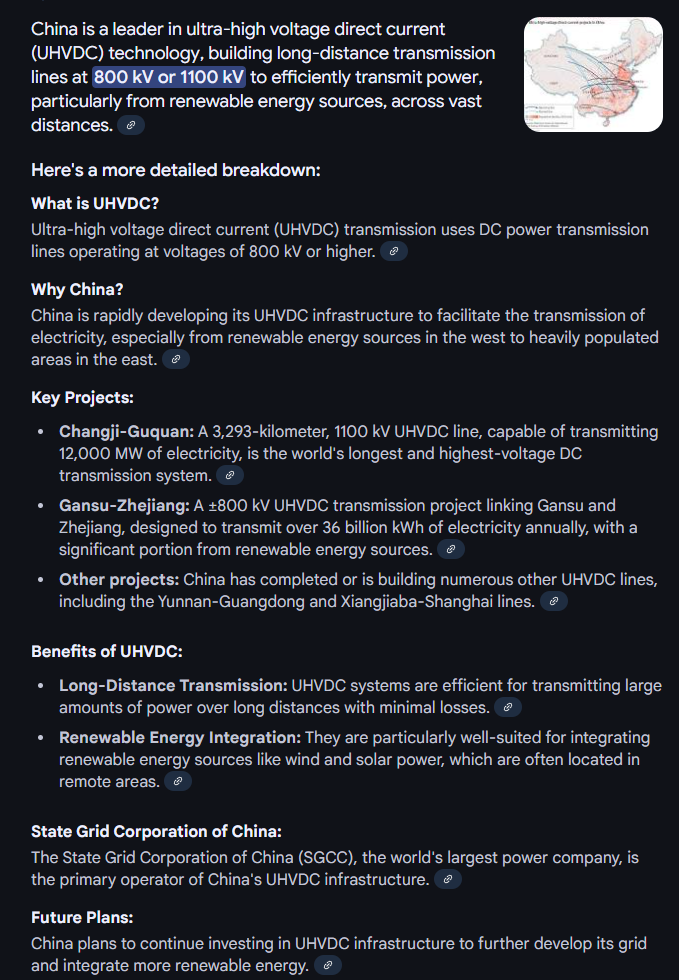

China actually leads the world in PV generation and in ultra-high-voltage DC long-distance transmission lines to move that power where it's needed. China apparently has 393 GW of PV, accounting for 37.3% of global PV capacity.

-

Any country with a huge pool of slave labour (in the literal sense of the word) could dominate a low-cost, high-volume infrastructure.

To borrow one of the words, "apparently": Chinese internal media, which is highly circulated by their wide network of paid shills, can also turn out a load of utter bollox statistics too. Just type in the question another way and the MI (Moron Intelligence) engine spits out different rubbish.

-

Staying within the chip power restrictions relating to approved China exports, Nvidia has updated its Hopper (H20) variant to the H20E, with the E denoting use of HBM3E memory. The chips will enter mass production next quarter. The H20E will be even more efficient due to the use of lower-power HBM3E. It's basically a nerfed Hopper H100 and will be priced at around $17,000 each. Nvidia would likely sell between 1.2M and 1.5M of these chips in China in 2025, and it outsells Huawei's Ascend chip 2:1. The Ascend is the best the Chinese can produce, and it still can't really compete with a neutered old architecture, the H100.
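The revenue those estimates imply, as a minimal units × price sketch. It assumes the ~$17,000 price holds flat across the whole 1.2M-1.5M unit range and ignores discounts, mix and timing.

```python
H20E_PRICE_USD = 17_000                    # the post's estimated H20E price per chip
UNITS_2025_RANGE = (1_200_000, 1_500_000)  # the post's estimated 2025 China unit range

def revenue_usd(units: int, price: int = H20E_PRICE_USD) -> int:
    """Units x price; ignores discounts, mix and timing."""
    return units * price

low, high = (revenue_usd(u) for u in UNITS_2025_RANGE)
print(f"Implied 2025 H20E revenue: ${low / 1e9:.1f}B to ${high / 1e9:.1f}B")
```

On those inputs the range works out to about $20.4B to $25.5B.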

Here is a great example of efficiency and TCO (total cost of ownership): a $17K chip vs a $50K chip (Blackwell).

Blackwell uses 1/12th the power per operation and is literally 100X faster when inferencing. For the same inference performance, that's $1.7M of H20s (100 × $17K) or $50K of Blackwell.
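The up-front cost comparison can be sketched as follows, assuming the post's figures (a flat 100X inference speedup, $17K per H20, $50K per Blackwell). It models purchase price only; the 1/12th power-per-operation figure is not modelled here and would, per the post, widen the gap further.

```python
import math

H20_PRICE_USD = 17_000        # the post's H20/H20E price estimate
BLACKWELL_PRICE_USD = 50_000  # the post's Blackwell price estimate
INFERENCE_SPEEDUP = 100       # the post: Blackwell ~100X faster than H20 at inference

def capex_for_equal_throughput(speedup: float = INFERENCE_SPEEDUP) -> tuple[int, int]:
    """Return (H20 fleet cost, Blackwell cost) for matching one Blackwell's throughput."""
    h20_units = math.ceil(speedup)  # whole H20s needed to match one Blackwell
    return h20_units * H20_PRICE_USD, BLACKWELL_PRICE_USD

h20_cost, bw_cost = capex_for_equal_throughput()
print(f"H20 fleet: ${h20_cost:,} vs Blackwell: ${bw_cost:,}")
```

Changing `speedup` shows how sensitive the comparison is to the 100X assumption.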

You're flogging a dead horse with old architecture. It also shows you just how far behind China is.

The news that China has 'cracked the code' with DeepSeek is completely fake, evidenced by the fact that they didn't use Huawei silicon; they used illegally sourced US-spec Nvidia silicon.

-

In 2024, analysts estimated NVIDIA shipped around 1 million H20 chips to China, generating over $12 billion in revenue, according to Reuters. The H20, priced between $12,000 and $15,000 per unit, became the go-to AI chip for Chinese firms like Tencent, Alibaba, and ByteDance after U.S. export controls banned more advanced models like the H100 in 2023. This $12 billion figure aligns with NVIDIA's fiscal 2025 China revenue of $17.11 billion (including Hong Kong), though that total covers all products, not just H20s. The figure reconciles with their 10-K disclosures.
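A rough reconciliation of those numbers, as a sketch. It multiplies the ~1M unit estimate by the reported price range and compares the result with the $17.11B FY2025 China total. Nvidia's fiscal 2025 ended in late January 2025, so it only approximately matches calendar 2024.

```python
UNITS_2024 = 1_000_000              # analysts' estimate of H20s shipped to China (as cited above)
PRICE_RANGE_USD = (12_000, 15_000)  # reported H20 price range per unit
FY25_CHINA_REVENUE_USD = 17.11e9    # Nvidia FY2025 China revenue incl. Hong Kong, all products

def implied_revenue_usd(units: int, price: int) -> int:
    """Units x price for one end of the price range."""
    return units * price

low, high = (implied_revenue_usd(UNITS_2024, p) for p in PRICE_RANGE_USD)
share_low = low / FY25_CHINA_REVENUE_USD
share_high = high / FY25_CHINA_REVENUE_USD
print(f"Implied H20 revenue: ${low / 1e9:.0f}B-${high / 1e9:.0f}B, "
      f"i.e. {share_low:.0%}-{share_high:.0%} of FY2025 China revenue")
```

On these inputs H20s would account for roughly 70%-88% of the reported China total, which is consistent with that total covering other products too.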

For 2025, demand has spiked further. The Information reported on April 2, 2025, that Chinese companies ordered at least $16 billion worth of H20 chips in just Q1 (January–March), driven by fears of tighter U.S. restrictions.

The China story has been done to death. 'Sell, they're losing China' has been the call for almost 2 years. Every year, the China business grows.

The H20 is receiving an upgrade to the H20E for the June time frame: the same performance, just better efficiency.

-

If this extra tariff from China stays, surely the chips they were getting, if any, would be diverted and snapped up by US companies?

-

Whilst we can never predict short-term price movements, the current price is ridiculously cheap IMO. On fundamentals, it is 40% cheaper than Coke, which has no growth.

But there is a lot of confusion out there. I was asked yesterday, 'What about other countries retaliating with tariffs on the U.S.?' What they meant was: how would Nvidia deal with a tariff from another country? I explained that tariffs are attached to the country of origin, not the country of ownership. 85%+ of the built-up cost of Nvidia hardware comes from Taiwan and other non-US countries, so those tariffs don't apply. However, the media is spinning the story to stir up fear.

If you take a longer term view the current price is an opportunity to be considered.

-

It's mad... didn't Coke go up yesterday?

-

The Trump Administration is apparently pausing the implementation of an export ban to China for Nvidia's H20 GPU.

The H20 is the most advanced chip offered by Nvidia that is still allowed to be exported to China. Industry observers and Chinese tech companies had expected the H20 to be added to the list of other banned hardware.

Why? Simple. The H20 is significantly less powerful than even the Hopper H200, and the Blackwell chip leaves Hopper in its dust. It's a positive that the government is avoiding anything that would negatively impact its most important company.